

Cognitive Psychology Reveals LLM Vulnerabilities: AI Security Foundations

Introduction

Welcome to "The Model: From a Security Perspective" - Part 1 of our deep dive into AI security.

Just as mastering networking fundamentals is essential for cybersecurity expertise, understanding how AI models work is the foundation for AI security and jailbreaking. This series will transform you from someone who sees AI as an invincible black box into a "model bender" who understands its vulnerabilities.

What you'll gain:

- A hacker's mindset for reverse engineering AI models

- Understanding of how information flows through neural networks

- Knowledge of why even mathematically perfect models can fail

- Insight into the unsolved problems that create attack vectors

We'll start with cognitive psychology - exploring how AI models mirror human thinking - then dive into the mathematical foundations that power (and limit) these systems.

An Extended Analogy for an AI Model: The Newborn Child

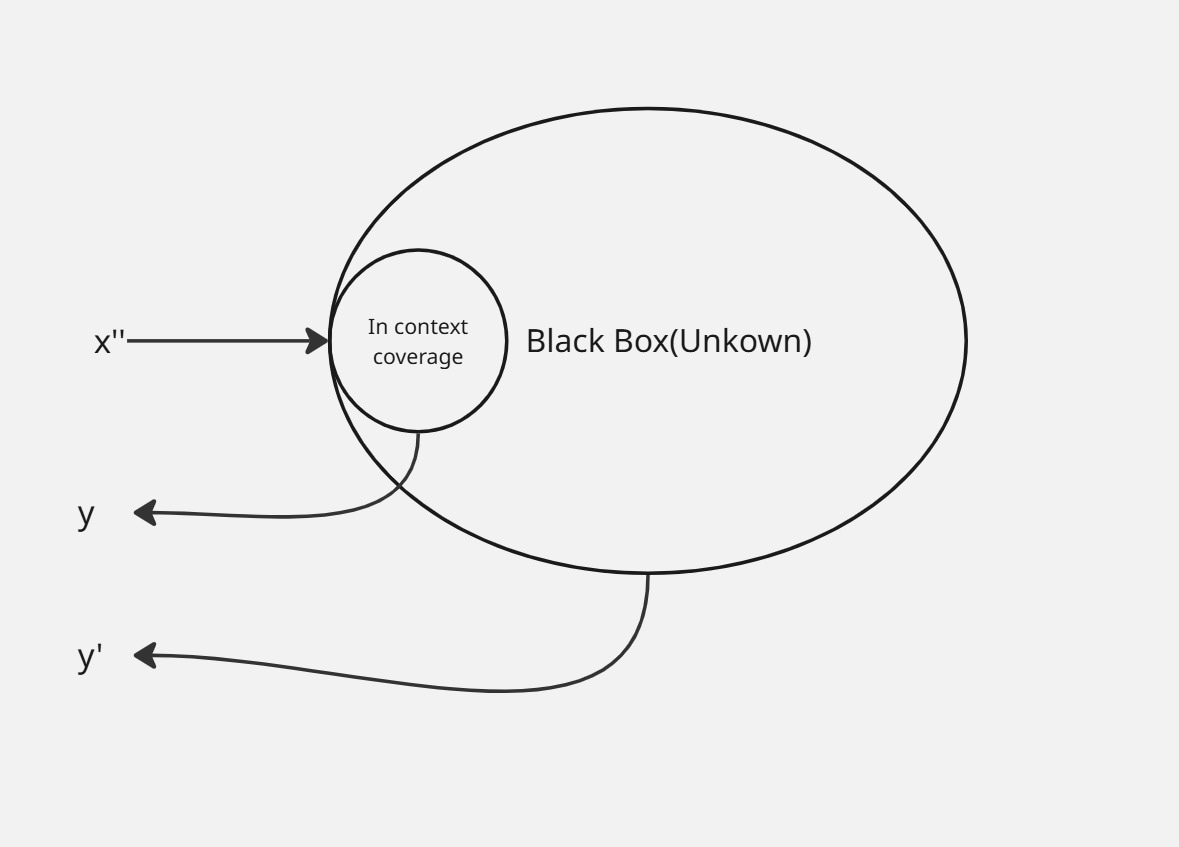

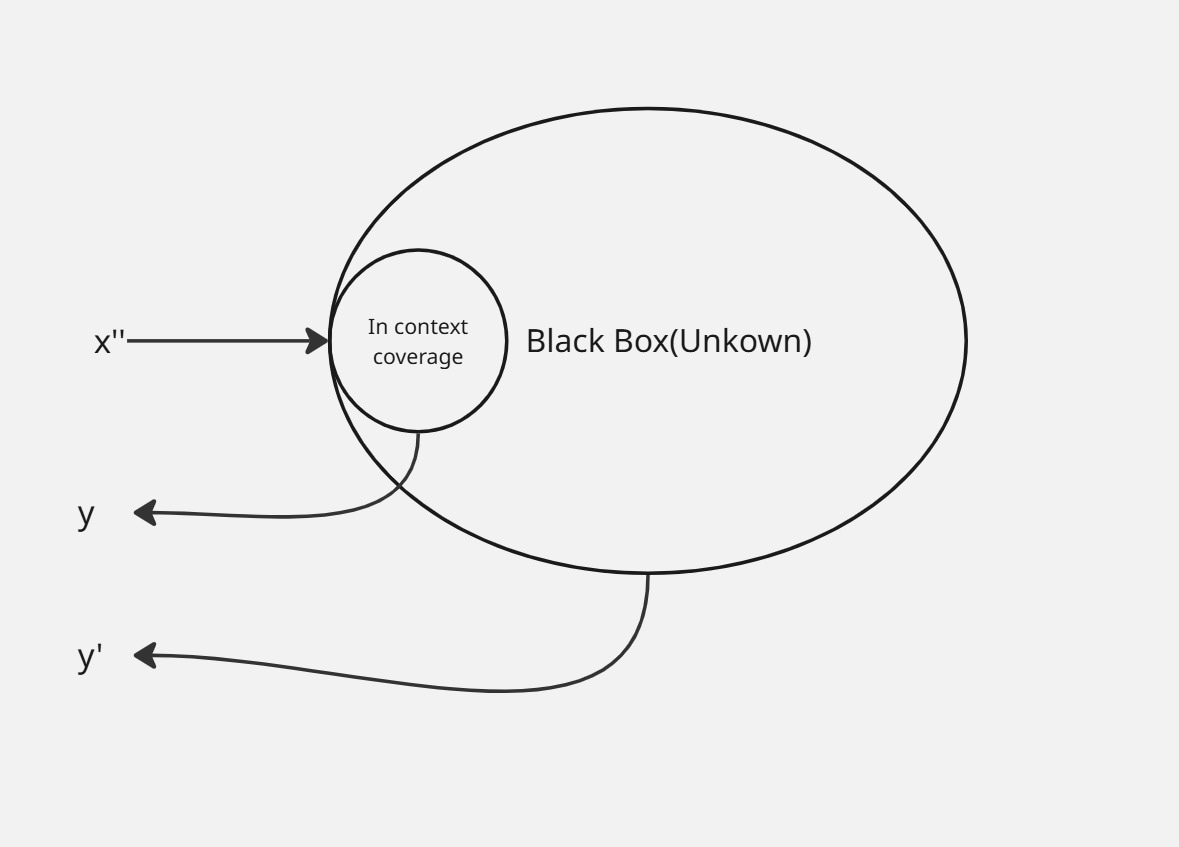

Reasoning from first principles, an AI model is an advanced pattern-recognition system. It uses the patterns it learned from its training data to predict the best possible output for a given input.

By this definition, a model is only as dumb or as smart as its training data and the patterns it has learned. Learning patterns is foundational both to modern AI systems and to human learning in general. Think of a model as a newborn child. Some models are architecturally more powerful than others, just as some children have a genetic advantage over others. However, what often makes some children smarter than their peers is the influence of their environment. Just as an ordinary child with a supportive learning environment and rich experience can surpass a born genius whose potential goes unused, a mathematically less powerful model can outperform a more powerful one when it is trained on accurate, well-labeled data.

As the illustration above shows, the same AI model can be impressive or dumb depending on the quality of its data. You can think of an untrained model as a freshly formatted brain: it doesn't understand simple or complex inputs until it has been trained on similar information. Just as a child raised speaking only English can't understand or speak Spanish fluently, a model can't reliably produce correct output outside the context of its training data. From this analogy, you can see that a model is only powerful (accurate) within the context of its training data. Outside that context, its output is neither predictable nor reliable.

In the diagram above, if you feed an input x'' into the model, it returns a predictable output y when the input lies within its training context; when the input lies outside that knowledge context, it returns an arbitrary output y''.
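To make the in-context versus out-of-context idea concrete, here is a minimal sketch of our own (not the model in the diagram): a straight line is fit by least squares to samples of y = x² drawn only from the range [0, 1], then queried inside and far outside that range. The function names and the choice of y = x² as the "true pattern" are illustrative assumptions.

```python
# Minimal sketch: a model is only reliable inside the range it was trained on.
# Assumptions: the "model" is a least-squares line; the true pattern is y = x**2.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b

def true_pattern(x):
    return x ** 2

# "Training data" covers only x in [0, 1].
train_x = [i / 10 for i in range(11)]
train_y = [true_pattern(x) for x in train_x]
a, b = fit_line(train_x, train_y)

def model(x):
    return a * x + b

for x in (0.5, 10.0):  # in-context input vs. out-of-context input
    err = abs(model(x) - true_pattern(x))
    print(f"x={x}: predicted={model(x):.2f}, true={true_pattern(x):.2f}, error={err:.2f}")
```

Inside the training range the error is about 0.1; at x = 10 the line predicts roughly 9.85 against a true value of 100. Nothing about the model changed, only where the input came from.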

From a security perspective, we can only break a model through what we control, and data is the only thing we control. If you hope to stand a chance, it's time to learn the data science behind a model.

How Information is Processed: The Thinking Machine

Models are trained on data, so understanding how data is processed is critical.

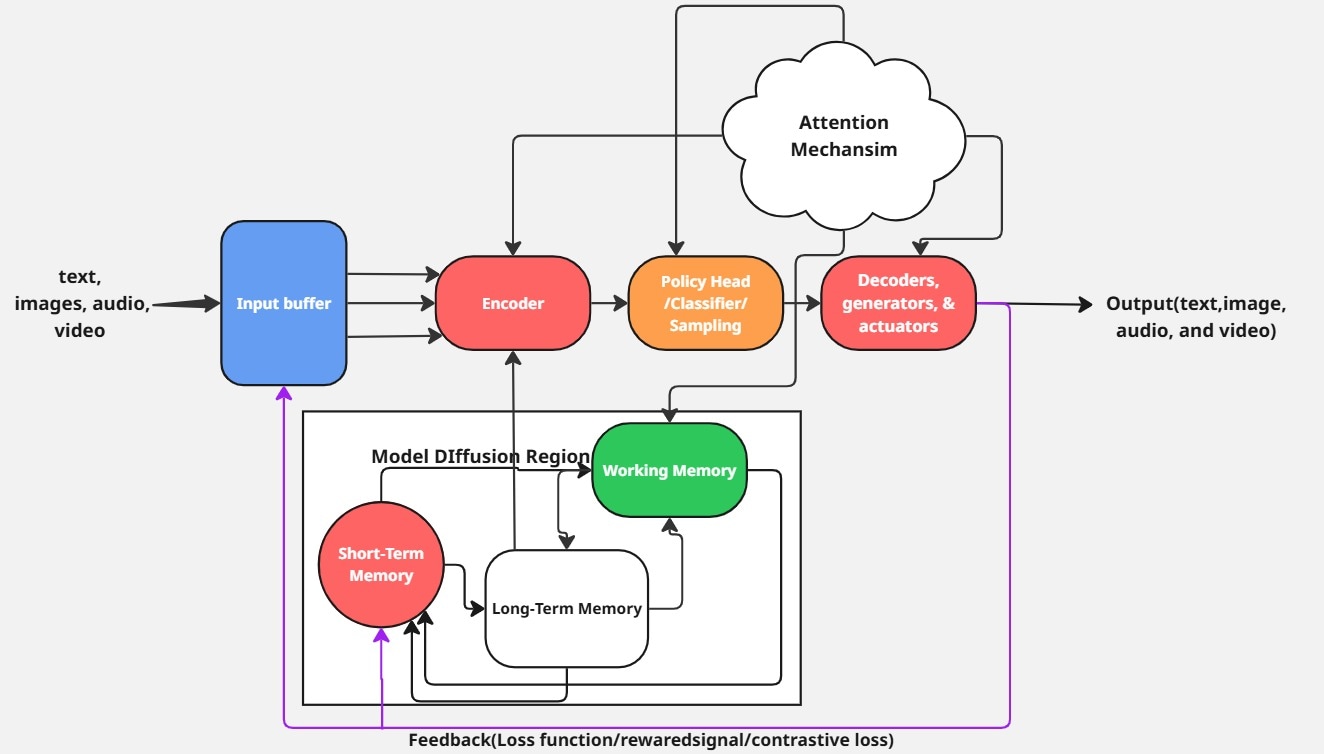

Stripped to its skeleton, a model is simply a complex mathematical function. When a model is trained on data (images, text, audio), that information is distilled into its parameters. In short, the model processes information by transforming input data into output data through many layers of mathematical functions.
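As a rough illustration of "a model is a complex mathematical function", the sketch below composes two layers (a linear map, a ReLU nonlinearity, then another linear map) into a single function. The weights are arbitrary placeholder numbers standing in for trained parameters; a real network has millions or billions of them.

```python
# Minimal sketch: a two-layer network is just nested math functions.
# The weights and biases are arbitrary placeholders, not trained values.

def linear(x, weights, bias):
    """Compute W @ x + b for a list-of-lists matrix W."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    """Zero out negative activations."""
    return [max(0.0, vi) for vi in v]

W1 = [[1.0, -1.0], [0.5, 0.5]]  # layer 1 parameters (2 inputs -> 2 hidden units)
b1 = [0.0, 0.0]
W2 = [[2.0, 1.0]]               # layer 2 parameters (2 hidden units -> 1 output)
b2 = [0.5]

def model(x):
    hidden = relu(linear(x, W1, b1))  # layer 1 followed by the nonlinearity
    return linear(hidden, W2, b2)     # layer 2 produces the output

print(model([3.0, 1.0]))  # [6.5]
```

"Training" means adjusting `W1`, `b1`, `W2`, and `b2`; the structure of the function stays the same.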

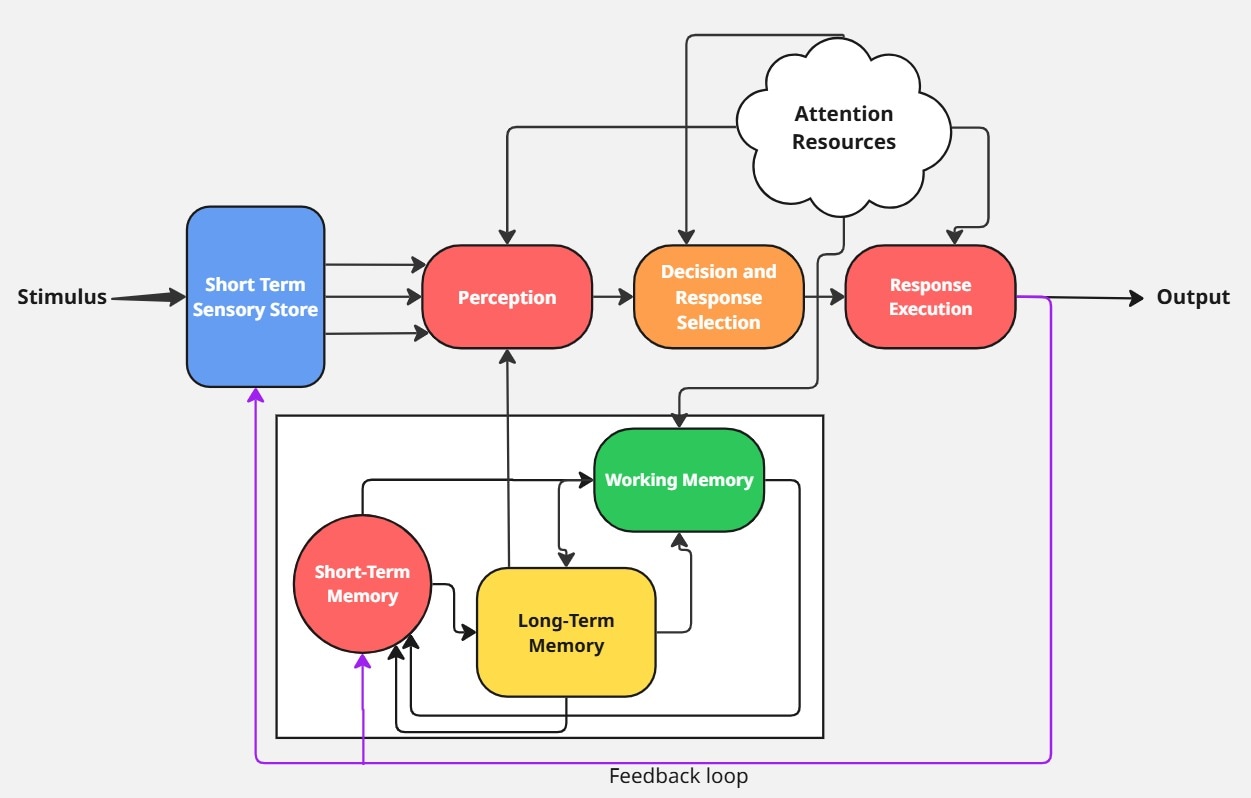

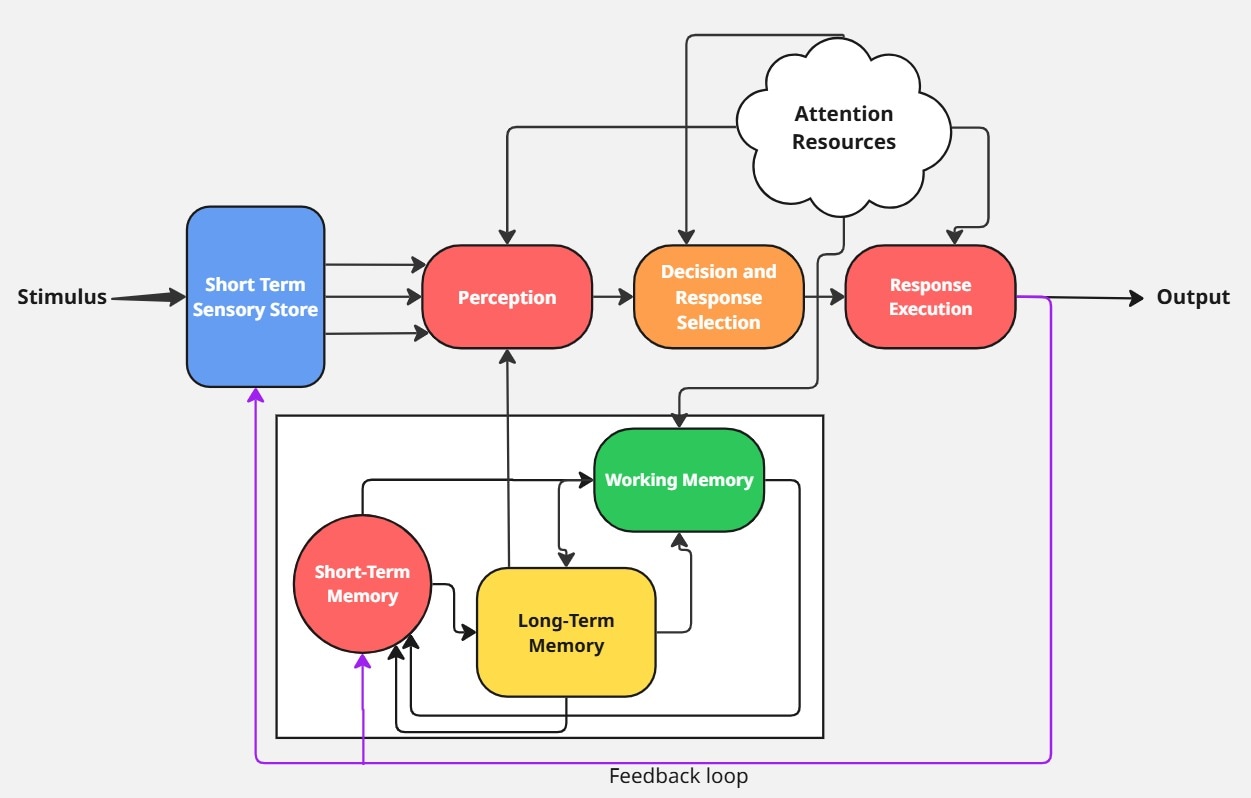

We'll now analyze the cognitive information-processing model of human thinking and how its concepts are applied in modern AI systems.

The cognitive model below is inspired by Wickens' Information Processing Model. It serves as the base model for understanding modern AI systems, and we will use the diagram to explain how different AI systems process information.

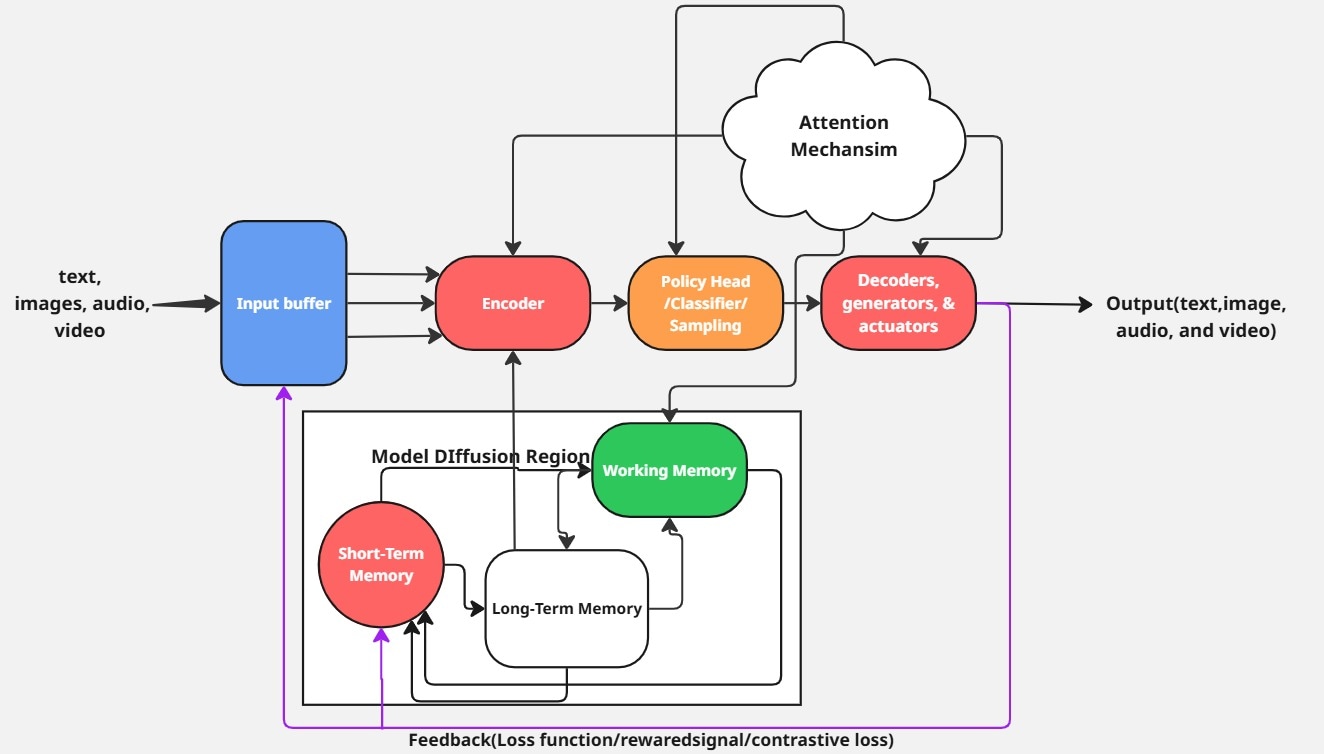

The cognitive model above describes the data flow in modern AI systems. This model is the basic architecture governing the development of different modern thinking machines.

To deepen your understanding of modern AI systems, let's decouple this cognitive architecture and see how modern thinking machines work.

Reverse Engineering Model Components

We'll break down the different components of the cognitive model and their relationships to modern AI systems.

What You'll Learn in This Section:

This comprehensive breakdown covers 10 core components that bridge human cognition and AI systems:

Input Processing:

- Stimuli - How AI systems receive and process inputs

- Short-Term Sensory Storage - Input buffers and preprocessing pipelines

- Perception - Feature extraction and encoding layers

Cognitive Processing:

- Attention Resources - Attention mechanisms and focus control

- Decision & Response Selection - Policy heads and probabilistic sampling

- Working Memory - Context windows and temporary processing

Memory Systems:

- Long-Term Memory - Neural network weights and knowledge storage

- Short-Term Memory - Conversational context and temporary retention

Output & Learning:

- Response Execution - Decoders and output generation

- Feedback/Learning - Loss functions and model improvement

Each component shows you exactly how human cognitive processes map to AI architectures, revealing the security implications and attack vectors at every stage.

Input Processing

1. Stimuli (Inputs):

In the human cognitive model, stimuli are sensory inputs such as sounds, visuals, and touch. In AI systems, these inputs are represented as images, videos, and text. In both humans and LLM (Large Language Model) systems, incoming inputs move directly into the STSS/input buffer.

2. Short-Term Sensory Storage (Input Buffers and Preprocessing Pipelines):

In humans, the STSS (short-term sensory storage) consists of the sensory registers (sensory memory). It acts as a temporary buffer, allowing the brain to filter relevant data and transfer it to short-term memory for further processing.

One reason you don't react to every event around you (mixed stimuli) is that your brain selects only the stimuli it judges harmful, interesting, beneficial, or attractive. This is why you quickly notice and process incidents, messages, and videos related to your interests. Depending on how strongly this content affects you, it may stay in your short-term memory for a while or stick permanently in your long-term memory.

The same concept applies to AI systems, which use input buffers or preprocessing pipelines to hold inputs temporarily before feeding them to the neural network. You have probably noticed that, given very long content, an LLM often responds to only some parts of it, as if filtering the input. This mimics the human thinking system: the LLM picks out the relevant parts of a very large input, constrained by the size of its input buffer or processing pipeline.

Just as the contents of the STSS decay in humans under information overload, an AI input buffer uses a sliding window or pruning to discard old data (mostly in video processing). Inputs held in the STSS/input buffer then quickly move on to be encoded by the perception layers.
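A sliding-window input buffer like the one described above can be sketched with Python's `collections.deque`, whose `maxlen` argument makes the deque discard its oldest item automatically when a new one is appended. The frame labels and the buffer size of 3 are illustrative assumptions.

```python
from collections import deque

# Minimal sketch of a sliding-window input buffer (e.g., for video frames).
# Assumption: the buffer holds at most 3 items; the oldest are discarded first.
BUFFER_SIZE = 3
buffer = deque(maxlen=BUFFER_SIZE)

for frame in ["frame1", "frame2", "frame3", "frame4", "frame5"]:
    buffer.append(frame)  # when full, append() silently evicts the oldest frame

print(list(buffer))  # ['frame3', 'frame4', 'frame5']
```

From a security angle, anything that has slid out of the window is simply invisible to the stages that follow.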

3. Perception (Feature Extraction/Embedding Layers/Encoders):

Perception is the process of interpreting and giving meaning to stimuli (inputs). It is essential to how human cognition reacts to the environment.

In modern AI systems, this component is mimicked by encoders, feature extractors, and embedding layers (e.g., convolutional or transformer encoders). The model applies the perception principle by filtering and encoding different media (text, video, audio, and images) into numerical vectors. Encoding inputs in AI systems relies on linear algebra and vectorized computation.
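The text side of this encoding step can be sketched as a toy tokenizer plus an embedding table that maps each token ID to a vector. The vocabulary, whitespace tokenization, 4-dimensional vectors, and random initialization are all illustrative assumptions; production models use subword tokenizers and learn their embedding values during training.

```python
import random

# Minimal sketch: text -> token IDs -> embedding vectors.
# Assumptions: whitespace tokenization, a tiny vocabulary, and random
# (untrained) 4-dimensional embeddings.
vocab = {"<unk>": 0, "ignore": 1, "previous": 2, "instructions": 3, "hello": 4}
EMBED_DIM = 4
rng = random.Random(0)  # seeded so the table is reproducible
embedding_table = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in vocab]

def tokenize(text):
    """Map each lowercase word to its ID, falling back to <unk>."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

def embed(token_ids):
    """Look up one vector per token ID."""
    return [embedding_table[i] for i in token_ids]

ids = tokenize("Ignore previous instructions please")
vectors = embed(ids)
print(ids)  # [1, 2, 3, 0]: 'please' is out of vocabulary
print(len(vectors), len(vectors[0]))  # 4 4
```

Everything downstream sees only these vectors, not the original characters, which is why this stage matters so much for filtering.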

From a security perspective, this is the stage where the first malicious bypass happens. To understand more of the interconnections, let's move on to the next computational mechanism: attention resources.

Cognitive Processing

4. Attention Resources (Attention Mechanism):

Attention resources are a core component of the human cognitive system. In the human cognitive model, attention resources tune the brain to focus on relevant information while filtering out extraneous information, within the limits of their capacity. This is why you feel mentally overwhelmed when juggling multiple tasks in parallel and have to prioritize a limited few. Far more complex processes happen here, but this is the core function.

The same principle holds in modern AI systems. The part of the neural network responsible for this process, the attention mechanism, assigns higher weights to relevant input features while suppressing less relevant ones. This is a major reason why AI systems don't always address every requirement in your prompt in full detail.
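In transformers, this weighting is usually implemented as scaled dot-product attention: a query vector is compared with every key, the scores are divided by √d and passed through softmax, and the resulting weights mix the value vectors. The sketch below handles a single query with tiny hand-picked vectors, which are illustrative assumptions; real models use learned projections and many heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Weighted sum of value vectors: tokens with high weights dominate the output.
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output

# Hypothetical 2-D keys/values for three input tokens; the query matches token 0.
keys = [[4.0, 0.0], [0.0, 4.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [4.0, 0.0]

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
```

Nearly all of the weight lands on the first token and the other two are almost ignored, which is the "focus while suppressing" behavior described above.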

Just as humans can suffer from conditions that impair attention, such as ADHD, traumatic brain injury (TBI), and schizophrenia, models suffer from related problems such as attention collapse (context blindness), looping inference (over-focusing on one token), noisy attention weights, and attention degradation over long context windows (one reason some AI systems cap the number of input tokens). There is a great deal of interplay between the model's attention mechanism, its other neural layers, and its memory.

5. Decision & Response Selection (Policy Head/Classification Layer/Softmax Sampling):

This component is where much of the model's probabilistic computation happens, and it is heavily influenced by attention resources (the attention mechanism). When inputs reach this stage, the network's policy component selects a response based on the current encoded perception inputs and the system's priorities. Policy heads are commonly associated with models like AlphaGo, AlphaZero, and autonomous systems.

Note that this selection happens after the softmax function converts the processed scores for each category (logits) into a probability distribution, from which a choice is sampled or the most likely option is taken. The selection usually draws on the system's overall knowledge context, since memory, perception, decision, and execution are all influenced by attention resources.
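The decision step can be sketched as: convert raw scores (logits) into probabilities with softmax, then either take the most likely option (greedy) or sample from the distribution, with a temperature parameter controlling how random the choice is. The candidate tokens and logits are invented for illustration.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

candidates = ["Sure", "Sorry", "Maybe"]  # hypothetical next tokens
logits = [2.0, 1.0, 0.1]                 # hypothetical model scores

probs = softmax(logits)
greedy_choice = candidates[probs.index(max(probs))]  # deterministic decision

rng = random.Random(42)
sampled_choice = rng.choices(candidates, weights=softmax(logits, temperature=1.5))[0]

print([round(p, 3) for p in probs], greedy_choice, sampled_choice)
```

Greedy selection always returns "Sure" here, while sampling at a higher temperature occasionally picks a less likely token. That randomness is one reason the same prompt can produce different answers.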

6. Working Memory (MANNs/Cross-Attention Layers/Internal Controllers/State Representation and Replay/Hidden & Cell State/Context Window):

Working memory is where perception feeds in interpreted data. In AI systems, it is where encoded data are analyzed and interpreted to extract the correct meaning. Many LLMs, neural networks, transformer architectures, and memory-augmented networks mirror aspects of human working memory.

Working memory has finite capacity. That is why you can't handle dozens of tasks thrown at you at once: even when you know what all the tasks are, you have to step back and tackle them one at a time.

AI systems push past this limit with more GPU compute and architectures that process information in parallel, but their processing is still bounded by GPU computation (a physical limit) and by the transformer's context window (the maximum sequence length). Working memory in AI is also heavily influenced by attention resources (the attention mechanism) and by the model's other memory systems, chiefly its embeddings and trained parameters.

Memory Systems

7. Long-Term Memory (Neural Network Weights/Knowledge Base/Database):

Building on Wickens' cognitive model, long-term memory (LTM) can be divided into different knowledge repositories. Below is a classification with AI and LLM equivalents or analogies:

Declarative Memory (Conscious, Explicit Knowledge):

| Memory Type | Human Function | AI Implementation | Examples |
|---|---|---|---|
| Semantic Memory | Languages, rules, facts, meanings | Knowledge Bases, LLMs, RAG | BERT, RAG, Grok 3 |
| Episodic Memory | Experience, events, context | Memory Networks, External Memory | DNC, Memory Networks, MANNs |
| Meta Declarative | Confidence, awareness | Self-reflective AI, Uncertainty models | Bayesian Neural Networks, DeepSearch |

Semantic Memory:

As the name "semantic" implies, this memory handles language, rules, facts, and meanings. It is further grouped into the following:

- Factual Knowledge

- Conceptual Framework

- Language and Meaning

In AI systems, this memory's behavior is mimicked by knowledge bases, LLMs, and RAG through two key mechanisms: knowledge encoded in weights and retrieval-augmented generation. Examples include BERT (Bidirectional Encoder Representations from Transformers), RAG pipelines, and Grok 3.
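The retrieval half of RAG can be sketched as: turn the query and each stored document into vectors, rank the documents by cosine similarity, and prepend the best match to the prompt. Here simple word-count vectors stand in for learned embeddings, and the documents and prompt template are hypothetical.

```python
import math
from collections import Counter

def vectorize(text):
    """Bag-of-words vector (a stand-in for a learned embedding)."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

documents = [
    "paris is the capital of france",
    "the transformer architecture uses attention",
    "python is a programming language",
]

def retrieve(query, docs):
    """Return the document most similar to the query."""
    q = vectorize(query)
    return max(docs, key=lambda d: cosine(q, vectorize(d)))

query = "what is the capital of france"
context = retrieve(query, documents)
prompt = f"Context: {context}\nQuestion: {query}"  # hypothetical prompt template
print(prompt)
```

Because retrieved text is pasted straight into the prompt, whatever sits in the knowledge base effectively becomes part of the model's input.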

Episodic Memory:

This memory is responsible for experience, events and context. This is further grouped into the following:

- Autobiographical Events

- Temporal Context

- Emotional Association

This memory type is simulated in MANNs (Memory-Augmented Neural Networks) and Memory Networks using external memory stores and context retrieval, e.g., the DNC (Differentiable Neural Computer), Memory Networks, and Grok 3's memory.
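Memory-augmented networks such as the DNC read from external memory using content-based addressing: compare a key vector against every memory slot, turn the similarities into weights with a softmax sharpened by a strength parameter β, and return the weighted sum of the slots. The sketch below shows only that read step with a made-up memory matrix; it omits the DNC's write heads and temporal links.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def content_read(memory, key, beta):
    """Content-based read: softmax(beta * cosine(key, slot)) over slots, then weighted sum."""
    scores = [beta * cosine(key, slot) for slot in memory]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    read = [sum(w * slot[j] for w, slot in zip(weights, memory)) for j in range(len(memory[0]))]
    return weights, read

# Hypothetical memory: each row is one stored "episode".
memory = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
key = [0.9, 0.1, 0.0]  # a noisy cue that resembles the first episode

weights, read_vector = content_read(memory, key, beta=10.0)
print([round(w, 3) for w in weights])
```

Even a partial, noisy cue retrieves the closest stored episode, much as a fragment of a memory can bring back the whole event.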

Meta Declarative:

This memory is responsible for confidence and awareness. It is further divided into the following:

- Source Memory

- Meta-Memory

This long-term memory type applies to self-reflective AI systems and uncertainty models, which use confidence estimation and self-monitoring. Examples are seen in Bayesian Neural Networks (BNNs), Grok 3, and DeepSearch.
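One simple form of confidence estimation is to measure the entropy of a model's output distribution and abstain when it is too high. This is a deliberately simplified stand-in, not how BNNs or the named products work internally; the threshold of 1.0 nat and the example distributions are arbitrary assumptions.

```python
import math

def entropy(probs):
    """Shannon entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def answer_or_abstain(probs, labels, threshold=1.0):
    """Return the top label, or abstain if the distribution is too uncertain."""
    if entropy(probs) > threshold:
        return "I'm not sure"
    return labels[probs.index(max(probs))]

labels = ["A", "B", "C", "D"]
confident = [0.94, 0.02, 0.02, 0.02]
uncertain = [0.25, 0.25, 0.25, 0.25]

print(answer_or_abstain(confident, labels))  # A (low entropy)
print(answer_or_abstain(uncertain, labels))  # I'm not sure (entropy = ln 4)
```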

Non-Declarative Memory (Unconscious, Implicit Skills):

| Memory Type | Human Function | AI Implementation | Examples |
|---|---|---|---|
| Procedural Memory | Skills, sequences, habits | Reinforcement Learning, Fine-tuned models | AlphaGo, Fine-tuned BERT |
| Priming & Perceptual | Feature reuse, pattern recognition | Transfer Learning, Self-supervised | ViT, SimCLR, Grok 3 |
| Conditioning | Reward-based learning, stimulus response | RL, Associative Learning | DQN, RLHF, Hebbian Networks |
| Habituation | Noise filtering, adaptive forgetting | Attention, Regularization | EWC, Transformer attention |

Procedural Memory:

This type of memory is responsible for skills, sequences and habits.

This non-declarative memory type is applied in reinforcement learning and fine-tuned models through policy optimization and task-specific weights. Models such as AlphaGo, fine-tuned BERT, and robotic reinforcement learning systems adopt this memory technique.

Priming and Perceptual Learning:

This memory type is heavily mimicked in transfer learning and self-supervised learning through feature reuse and contrastive learning. It is applied in ViT (Vision Transformer) and SimCLR (a self-supervised framework for visual representation learning using contrastive methods), and Grok 3's contextual processing also applies this technique.

Conditioning(Classical/Operant):

In AI systems, this memory is simulated by RL (Reinforcement Learning) and associative learning, through reward-based learning and stimulus-response associations. Systems such as DQN (Deep Q-Network), RLHF (Reinforcement Learning from Human Feedback), and Hebbian networks (networks that strengthen connections between units that activate together) leverage this approach.
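Reward-based conditioning can be sketched with tabular Q-learning, the update that DQN approximates with a neural network: Q(s, a) ← Q(s, a) + α[r + γ·max Q(s′, ·) − Q(s, a)]. In the hypothetical one-step environment below, each episode ends after a single action, so the bootstrap term γ·max Q(s′, ·) is zero. The rewards and hyperparameters are invented for illustration.

```python
import random

# Minimal tabular Q-learning sketch on a hypothetical one-step environment:
# action 0 earns reward 0, action 1 earns reward 1, then the episode ends.
ALPHA, EPSILON = 0.1, 0.2
q = {0: 0.0, 1: 0.0}  # Q-values for the single state
rng = random.Random(0)

def reward(action):
    return 1.0 if action == 1 else 0.0

for episode in range(500):
    # Epsilon-greedy: mostly exploit the best-known action, sometimes explore.
    if rng.random() < EPSILON:
        action = rng.choice([0, 1])
    else:
        action = max(q, key=q.get)
    r = reward(action)
    # Terminal after one step, so the target is just the reward.
    target = r
    q[action] += ALPHA * (target - q[action])

print(q)  # the rewarded action ends up with the higher value
```

Whoever controls the reward signal controls what behavior gets reinforced, which is why reward design matters so much in RLHF.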

Habituation:

Habituation-like behavior shows up in the attention, regularization, and continual-learning mechanisms of AI systems through noise filtering and adaptive forgetting, for example in transformer attention, EWC (Elastic Weight Consolidation, which controls which weights may change when a model learns a new task), and Grok 3's filtering.
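For reference, the standard EWC objective is written below. When training on a new task B, weights that were important for an earlier task A (large Fisher information F_i) are pulled back toward their old values θ*_A, while unimportant weights remain free to change, and so to be "forgotten". The coefficient λ sets how much the old task matters.

```latex
\mathcal{L}(\theta) = \mathcal{L}_B(\theta) + \sum_i \frac{\lambda}{2} F_i \left(\theta_i - \theta^{*}_{A,i}\right)^2
```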

That's a long breakdown. Beyond explaining more of human information processing and how it informs modern AI systems, it gives you a foundation for understanding any modern AI system and the trends still to come.

8. Short-Term Memory (STM):

In the human cognitive system, this memory handles shallow, easily forgotten information that lasts only for the duration of the current engagement. Modern AI systems, especially AI agents, mimic this approach to hold conversational context.
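Conversational short-term memory in an agent is often just a rolling message list trimmed to fit a budget. Here is a minimal sketch, assuming word counts as a crude proxy for tokens and a hypothetical budget of 12. The system message is always kept, and the oldest turns are dropped first.

```python
# Minimal sketch of conversational short-term memory with a token budget.
# Assumptions: word count approximates token count; the system message is pinned.
MAX_TOKENS = 12

def count_tokens(message):
    return len(message["content"].split())

def trim_history(messages, budget=MAX_TOKENS):
    """Keep the system message plus as many recent turns as fit in the budget."""
    system, turns = messages[0], messages[1:]
    used = count_tokens(system)
    kept = []
    for msg in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(msg)
        if used + cost > budget:
            break                # older turns are "forgotten"
        kept.append(msg)
        used += cost
    return [system] + list(reversed(kept))

history = [
    {"role": "system", "content": "You are a helpful assistant"},  # 5 words
    {"role": "user", "content": "My name is Ada"},                 # 4 words
    {"role": "assistant", "content": "Nice to meet you Ada"},      # 5 words
    {"role": "user", "content": "What is my name"},                # 4 words
]
for m in trim_history(history):
    print(m["role"], ":", m["content"])
```

Only the system message and the last question survive, so the turn containing the user's name has been forgotten by the time the model is asked for it.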

Now things get interesting. Information in short-term memory may diffuse into long-term memory through a process called memory consolidation, which happens through prolonged, repeated task execution. You can type without looking at your keyboard because you have practiced repeatedly over a long time; at first, you couldn't even remember where the letters were. This example shows your typing skill moving from short-term to long-term memory.

Similarly, you may recall realizing certain things from observation alone, without reading them in a book. This comes from your natural creativity and intelligence. AI systems mimic this process by integrating information across distributed storage sites. Generative AI models owe much of their ability to what we can call model diffusion: a process in which information in the model's context, its parameters, and its embeddings link together to form new neural patterns.

From a security point of view, you have probably noticed that LLMs often hallucinate. This comes from incorrect integration and linking within the neural network, which produces outputs that need correction. As in human research, model diffusion probabilistically leads either to new correct findings (discoveries) or to new incorrect ones (hallucinations).

Output & Learning

9. Response Execution & Executors (Decoder/Actuators/Text-Image-Audio-Video Generators):

This is the final component. It translates internal representations into actions based on the results of perception, decision selection, working memory, short-term memory, and long-term memory, all coordinated by attention resources.

In humans, this corresponds to physical or non-physical reactions to stimuli. In AI systems, response execution takes different forms depending on the type of system:

- Large Language Models: LLMs execute responses through text generation, emitting tokens one at a time as their equivalent of a motor response. This is handled by components such as the decoder head and output layers.

- Robotics/Embodied AI: In robotic AI systems, response execution is carried out through physical actuation, driven by motor controllers and actuator drivers.

- Reinforcement Learning Agents: Agents execute responses by sending actions to the environment through components such as the action interface and policy output module.

- Generative Models: Response renderers, decoders, and diffusion samplers convert latent representations into sensory data (images, audio, and video).

- Multi-Agent or API-Driven AI Systems: In multi-agent systems, response execution is carried out by tool and API invokers.
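Token-by-token text generation can be sketched with a toy bigram "language model": count which word follows which in a tiny corpus, then repeatedly append the most likely next word (greedy decoding) until an end marker or a length limit is reached. Real LLMs replace the counts with a transformer's output distribution; the corpus here is made up.

```python
from collections import Counter, defaultdict

corpus = "the model reads the prompt . the model writes the answer ."
tokens = corpus.split()

# "Training": count next-token frequencies for each token (a bigram model).
next_counts = defaultdict(Counter)
for current, nxt in zip(tokens, tokens[1:]):
    next_counts[current][nxt] += 1

def generate(start, max_tokens=6):
    """Greedy autoregressive decoding: each output token becomes the next input."""
    output = [start]
    for _ in range(max_tokens):
        candidates = next_counts.get(output[-1])
        if not candidates:
            break
        token = candidates.most_common(1)[0][0]  # ties go to the first-seen token
        output.append(token)
        if token == ".":
            break
    return " ".join(output)

print(generate("the"))     # the model reads the model reads the
print(generate("prompt"))  # prompt .
```

With greedy decoding this toy model falls into a loop, a small-scale version of the repetitive "loop inference" failure mentioned in the attention section. Sampling with a temperature is one common way to break such loops.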

10. Feedback/Learning (Loss Function/Reward Signals/Contrastive Loss):

In the human cognitive model, the outcome of your response to a stimulus influences how you behave toward the same or similar stimuli in the future. The same holds true in AI systems, which evaluate every output with a loss function or a reward signal, depending on the learning paradigm.

In an AI feedback system, a loss function quantifies how wrong the model's prediction is compared to the target output. In RL (Reinforcement Learning) systems, the loss function is replaced by reward signals, which indicate how good an action was rather than measuring error.
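For classifiers and language models, the usual loss function is cross-entropy: the negative log of the probability the model assigned to the correct answer. The sketch below compares a confident, correct prediction with a confident, wrong one; the probabilities are invented.

```python
import math

def cross_entropy(probs, target_index):
    """Negative log-likelihood of the correct class."""
    return -math.log(probs[target_index])

# Hypothetical model outputs over three classes; the correct class is index 0.
good_prediction = [0.9, 0.05, 0.05]
bad_prediction = [0.1, 0.8, 0.1]

print(round(cross_entropy(good_prediction, 0), 3))  # 0.105 (small loss)
print(round(cross_entropy(bad_prediction, 0), 3))   # 2.303 (large loss)
```

Training nudges the parameters in whatever direction reduces this number, so a confidently wrong prediction produces a much larger correction than a slightly wrong one.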

Although loss functions and reward signals are common in supervised learning and RL systems, contrastive loss is used in self-supervised or unsupervised learning, in systems such as CLIP, SimCLR, and GPT-5 embedding pretraining. It allows models to learn meaningful representations by comparing positive (similar) and negative (dissimilar) data pairs. Rather than relying on explicit labels, it generates pseudo-supervision through data augmentation and multimodal alignment.
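A common contrastive objective is the InfoNCE loss, which SimCLR and CLIP use in variant forms. For an anchor embedding, the similarity to its positive pair is treated as the "correct class" among all candidates, and softmax cross-entropy is applied with a temperature τ. The 2-D embeddings and τ = 0.1 below are illustrative assumptions.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce(anchor, positive, negatives, tau=0.1):
    """Contrastive loss: -log(exp(sim(a,p)/tau) / sum of exp(sim/tau) over all candidates)."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))  # stable log-sum-exp
    return log_sum - logits[0]

anchor = [1.0, 0.0]    # e.g., embedding of an image
positive = [0.9, 0.1]  # e.g., an augmented view of the same image
negatives = [[0.0, 1.0], [-1.0, 0.2]]

aligned = info_nce(anchor, positive, negatives)
misaligned = info_nce(anchor, negatives[0], [positive, negatives[1]])
print(aligned < misaligned)  # True: matching pairs give lower loss
```

No labels appear anywhere: the "supervision" comes entirely from knowing which two views belong together.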

Conclusion

If you've made it this far, congrats! You've finished the first phase of exploring the secrets of modern AI systems, viewed through the lens of cognitive psychology and inspired by Wickens' information-processing model.

This journey is a marathon, not a sprint. This article distills a lot of insight drawn from research papers, articles, videos, and other resources on cognitive psychology and artificial intelligence, as well as real-world experience interacting with different AI models.

I suggest revisiting this article as a reference whenever you build, reverse engineer, or try to break AI systems.

Ready to dive deeper? Check out Part 2: Mathematical Components, Limitations & Attack Vectors where we explore the powerful mathematical foundations (linear algebra, calculus, probability theory, and statistics) that power—and compromise—AI systems.

Ready to Secure Your AI Systems?

Now that you understand the cognitive foundations and attack vectors in AI models, you might be wondering: "How do I actually audit and secure my AI systems in practice?"

At Zealynx, we specialize in comprehensive AI security assessments that go beyond traditional smart contract audits. Our team applies the cognitive security framework you've just learned, plus advanced mathematical analysis, to identify vulnerabilities in:

- LLM Applications - Prompt injection, context manipulation, data extraction

- AI Agent Systems - Multi-modal attacks, tool misuse, privilege escalation

- ML Pipeline Security - Training data poisoning, model extraction, adversarial inputs

- AI Infrastructure - API security, access controls, deployment vulnerabilities

What makes our AI audits different:

- Deep understanding of cognitive attack vectors (like those covered in this series)

- Mathematical analysis of model behaviors and failure modes

- Practical remediation strategies tailored to your AI architecture

- Ongoing security monitoring and threat intelligence

FAQ: Key Concepts in AI Security and LLMs – Part 1

1. What is a cognitive model in artificial intelligence?

A cognitive model in AI refers to a computational framework inspired by how humans think, learn, and process information. These models aim to mimic human cognition—like perception, memory, and decision-making—to make AI systems more flexible and intelligent.

2. How does data quality impact the performance and security of AI models and LLMs?

High-quality, well-labeled data allows AI models and LLMs to make accurate predictions and reduces the risk of unexpected or insecure behavior. Poor data quality can lead to errors, vulnerabilities, and unpredictable outputs, making models easier to exploit.

3. What are attention mechanisms in large language models (LLMs)?

Attention mechanisms enable LLMs to focus on the most relevant parts of input data when generating responses. This helps models handle complex language tasks more effectively, but flaws in attention can lead to errors or security weaknesses.

4. What is the difference between short-term memory and long-term memory in AI systems?

Short-term memory in AI temporarily holds recent information for immediate tasks, while long-term memory stores knowledge and skills learned over time. This distinction helps models process context and retain important information, similar to how humans remember things.

5. How do neural networks process input data in AI and LLMs?

Neural networks process input data by passing it through multiple layers, each transforming the data with mathematical operations. This layered approach allows AI models to extract features, recognize patterns, and generate outputs from raw data.

6. What are common vulnerabilities in AI models and large language models?

Common vulnerabilities include exposure to adversarial inputs, data poisoning, model inversion attacks, and weaknesses in attention mechanisms. These can lead to incorrect outputs, data leaks, or unintended model behavior.

7. What is model hallucination in AI, and why does it happen?

Model hallucination occurs when an AI model generates outputs that are plausible but factually incorrect or nonsensical. This often happens due to gaps in training data, ambiguous prompts, or limitations in the model’s architecture.

8. What does reverse engineering mean in AI security?

In AI security, reverse engineering involves analyzing and deconstructing AI models or systems to understand their inner workings, identify vulnerabilities, and find potential attack vectors.

Glossary

| Term | Definition |
|---|---|
| LLM | Large Language Model: AI systems trained on vast text data to understand and generate language. |
| Attention Mechanism | Neural network component enabling models to focus on relevant parts of input data. |
| Context Window | Maximum amount of text an LLM can process in a single interaction. |
| Neural Network | Computational system of interconnected nodes that learns patterns from data. |
| Embedding | Dense vector representation of data where similar items are positioned near each other. |