The Pre-Audit Checklist: How to Save 30% on Your Smart Contract Audit

Smart contract audits are likely the single largest line item in your engineering budget. If you are a Lead Engineer or CTO in Web3, you know the drill: you pay a premium for top-tier security researchers, often waiting months for a slot.

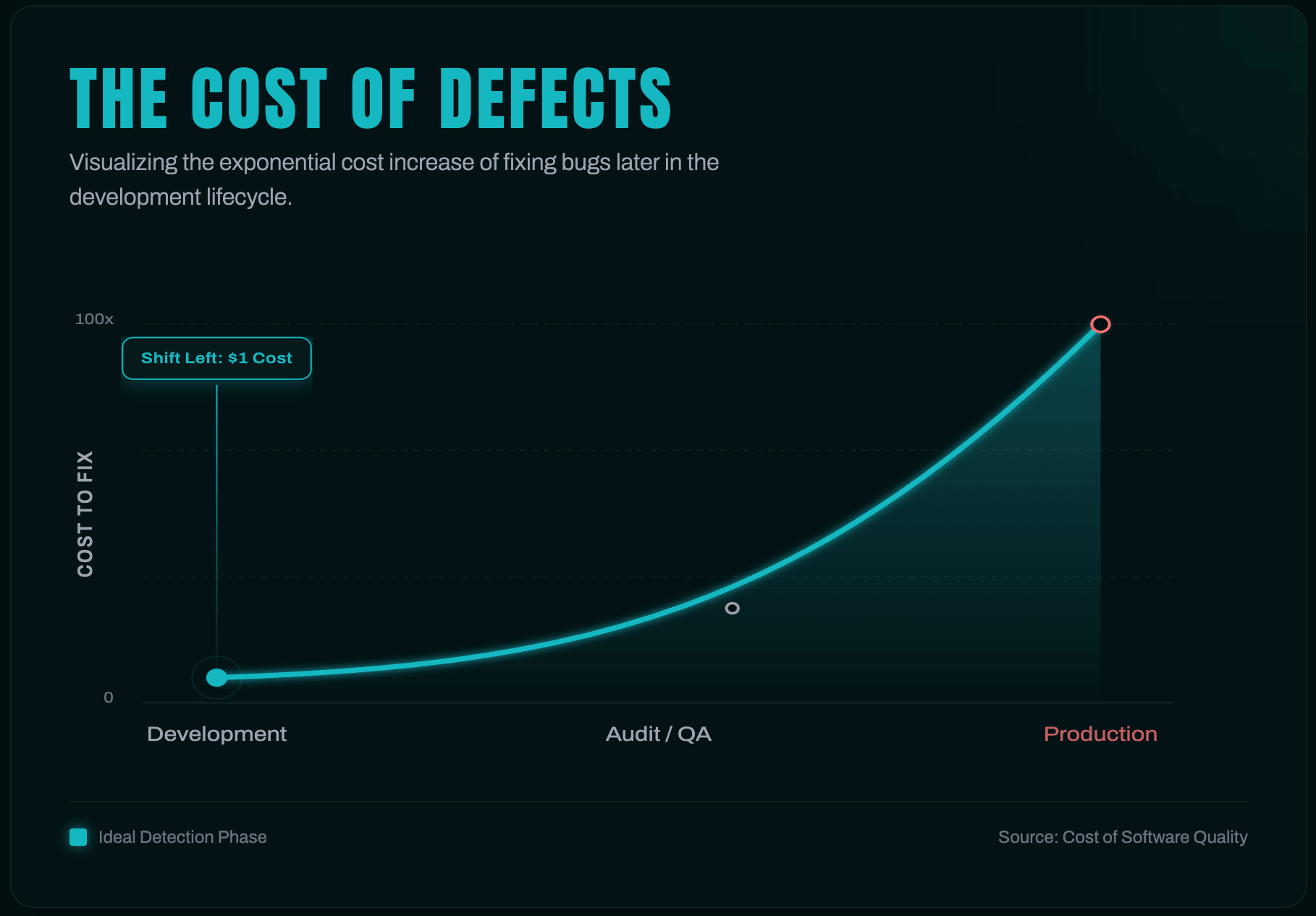

But there is a hidden inefficiency in this model. A significant portion of audit costs—conservatively estimated at 30%—is essentially a tax on unpreparedness.

When you hand over a raw codebase, you force highly paid auditors (charging upwards of $1,500/day) to perform low-level tasks: deciphering undocumented architecture, mapping variable names, or flagging syntax errors that a free linter could have caught. This increases "discovery time," drags out the engagement, and balloons the final bill.

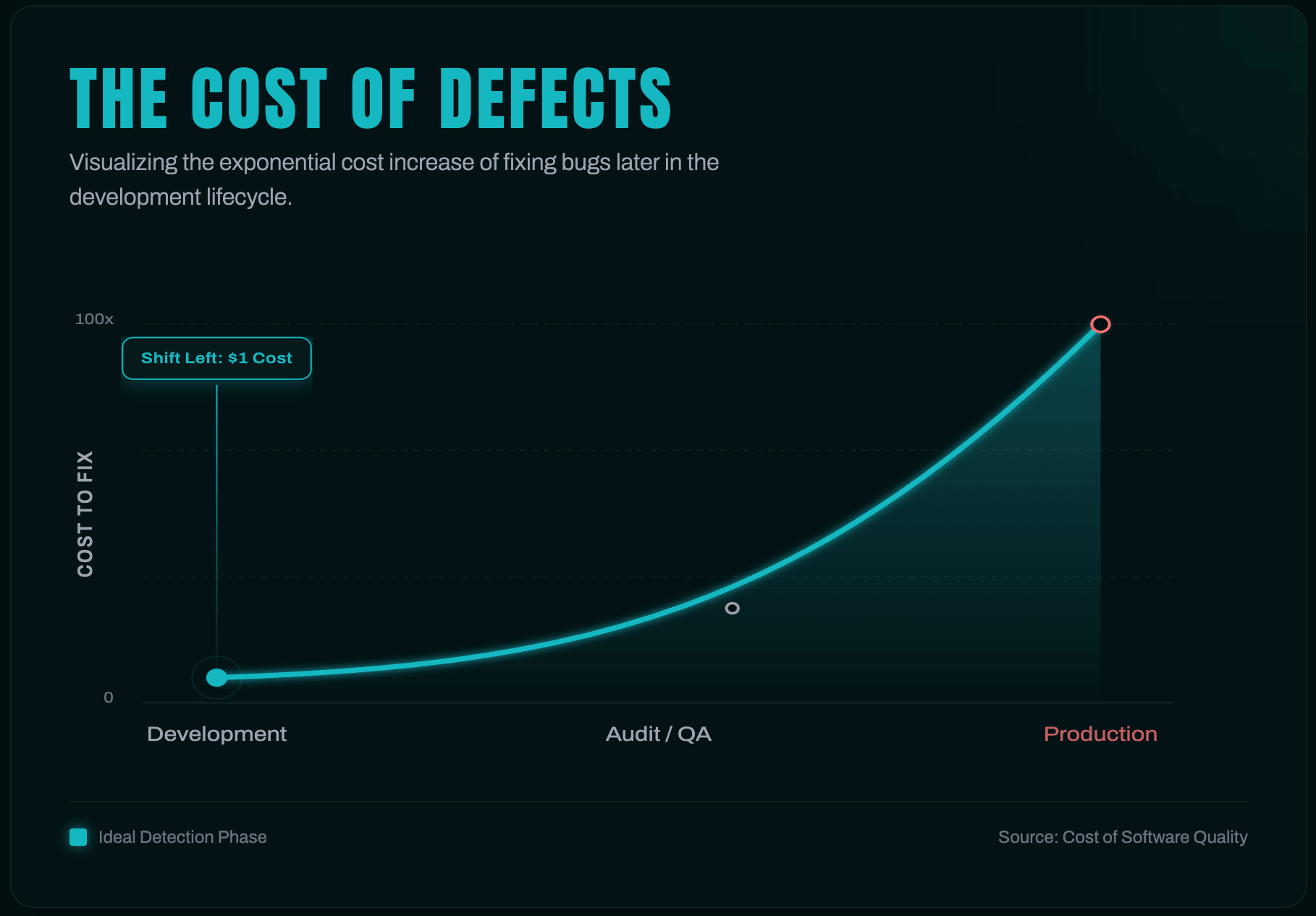

Your goal is to shift security "left." By presenting a sanitized, documented, and rigorously tested codebase, you allow auditors to skip the busy work and focus immediately on high-value logic verification.

Here is the technical checklist to de-risk your deployment and optimize your audit spend.

Phase 1: Strategic documentation

The most leveraged activity for cost reduction is defining the "Theory of Operation." If the auditor has to reverse-engineer your intent from the code, you are paying for them to guess.

Implement Strict NatSpec: Standardize inline documentation. Every public and external function requires `@notice`, `@param`, and `@return` tags.

Why: It highlights the "Intent vs. Implementation" gap. If the comment says "Deducts 1% fee" but the code calculates 0.1%, the auditor catches it instantly. NatSpec is the industry standard for this documentation.
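One way to track the NatSpec target before handing code over is a small script that lists public and external functions with no `@notice` tag above them. The sketch below is a deliberately crude, regex-based illustration, not a Solidity parser: the function name `functions_missing_notice` and the `FeeVault` sample are hypothetical, and it assumes the visibility keyword sits on the same line as the `function` keyword.

```python
import re
from typing import List

# Matches a Solidity function header declared public or external.
# Assumption: the visibility keyword is on the same line as `function`.
FUNC_RE = re.compile(r"^\s*function\s+(\w+)\s*\([^)]*\)[^{;]*\b(public|external)\b")

# Prefixes that mark a line as part of a NatSpec comment block.
COMMENT_PREFIXES = ("///", "/**", "*")


def functions_missing_notice(source: str) -> List[str]:
    """Return names of public/external functions with no @notice directly above."""
    missing = []
    lines = source.splitlines()
    for i, line in enumerate(lines):
        match = FUNC_RE.match(line)
        if not match:
            continue
        # Walk upward over the contiguous comment block above the function.
        j = i - 1
        has_notice = False
        while j >= 0 and lines[j].strip().startswith(COMMENT_PREFIXES):
            if "@notice" in lines[j]:
                has_notice = True
            j -= 1
        if not has_notice:
            missing.append(match.group(1))
    return missing


# Hypothetical contract used only to exercise the checker.
SAMPLE = """
contract FeeVault {
    /// @notice Deducts a 1% fee from `amount`.
    /// @param amount Gross amount in wei.
    /// @return net Amount after the fee.
    function applyFee(uint256 amount) external pure returns (uint256 net) {
        net = amount - (amount * 100) / 10000;
    }

    function sweep(address to) public {
    }

    function _helper(uint256 x) internal pure returns (uint256) {
        return x;
    }
}
"""

if __name__ == "__main__":
    print(functions_missing_notice(SAMPLE))
```

Here `applyFee` is documented, `_helper` is internal and therefore ignored, and `sweep` is reported. A real project would more likely rely on a dedicated linter rule or a proper parser; the point is only that this target can be checked mechanically.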

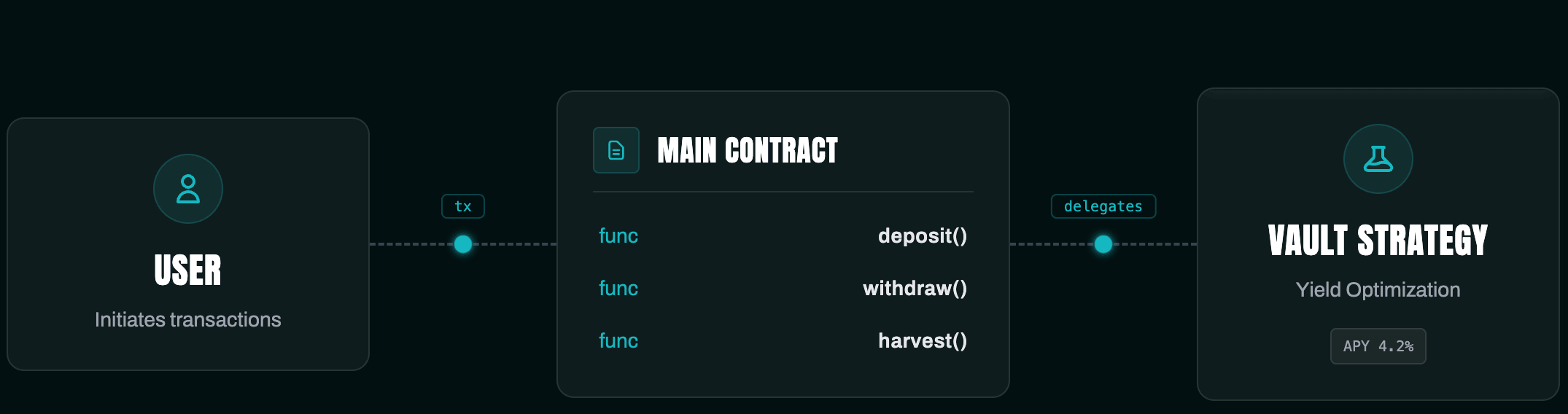

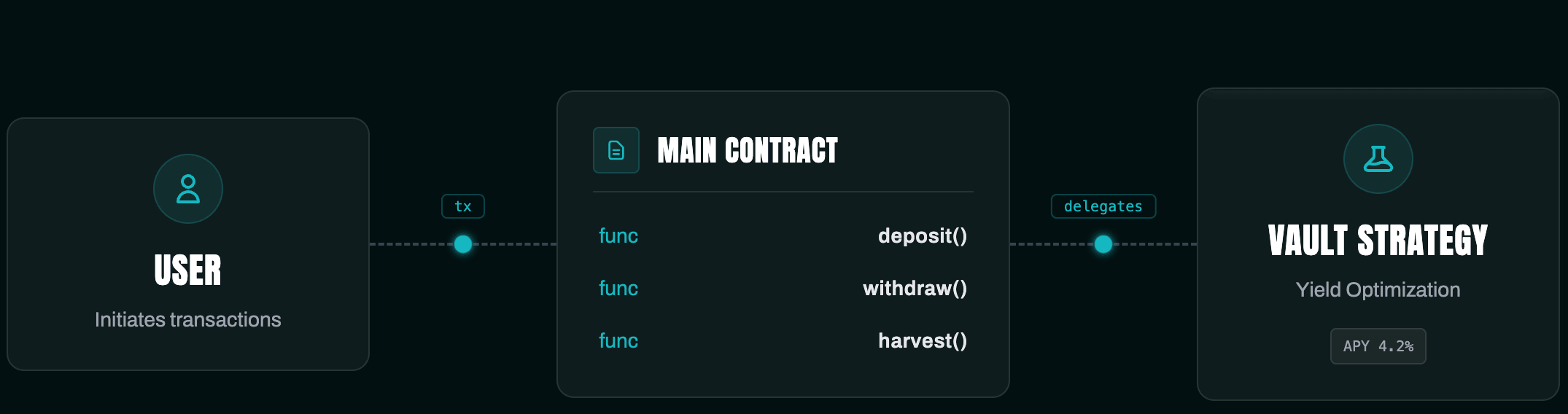

Visualize the Architecture: Don't make auditors mentally map your system. Provide:

- Call Graphs: To identify reentrancy vectors.

- State Machine Diagrams: Essential for governance or complex lifecycle protocols.

- Inheritance Trees: To visualize dependency hierarchies.

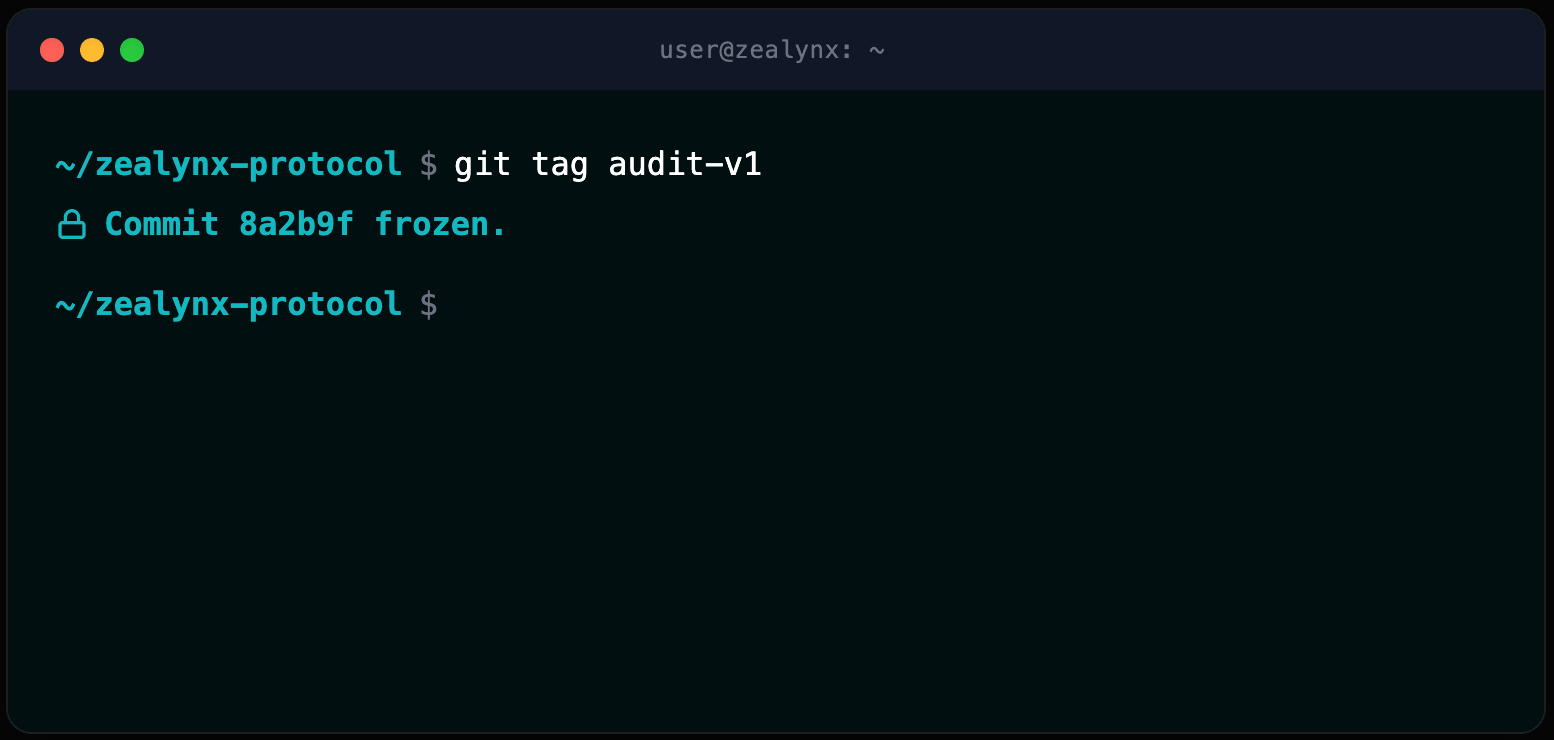

Define the "Frozen Commit": Tag a specific git commit (e.g., `git tag audit-v1`) and do not touch it. Pushing "quick fixes" shifts line numbers and invalidates the auditor's ongoing notes, leading to frustration and "diff review" surcharges.

Phase 2: Codebase hygiene

Before the house appraisal, you clean the house. A "noisy" codebase obscures vulnerabilities and signals carelessness.

Enforce Linter Compliance: Use Solhint for linting and Prettier (with prettier-plugin-solidity) for formatting. If an auditor has to point out styling inconsistencies, you are wasting their time. The codebase should look standard so they can scan it rapidly.

Eliminate Dead Code: Prune unused variables, commented-out blocks, and test files from the production build.

- Risk: Dead code can sometimes be "woken up" by attackers if internal functions are exposed.

- Cost: Auditors must read every line to confirm it is dead.

Lock Dependencies: Avoid floating pragmas (e.g., `^0.8.0`). Pin your compiler version (e.g., `0.8.19`) and lock dependencies in `package.json` to ensure the audited bytecode matches the deployed bytecode exactly.

Phase 3: The testing gauntlet

Low test coverage is the primary indicator that a project is not audit-ready.

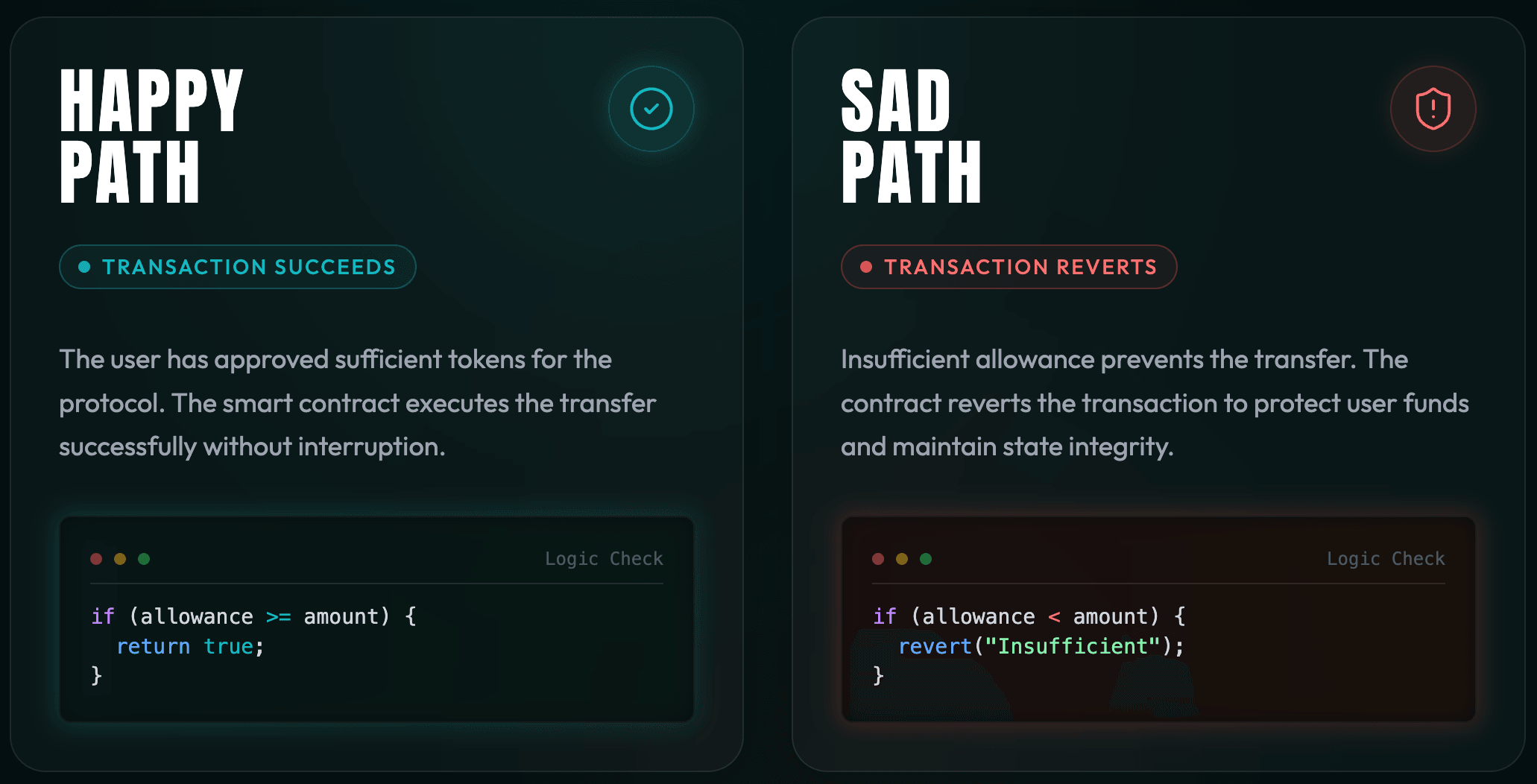

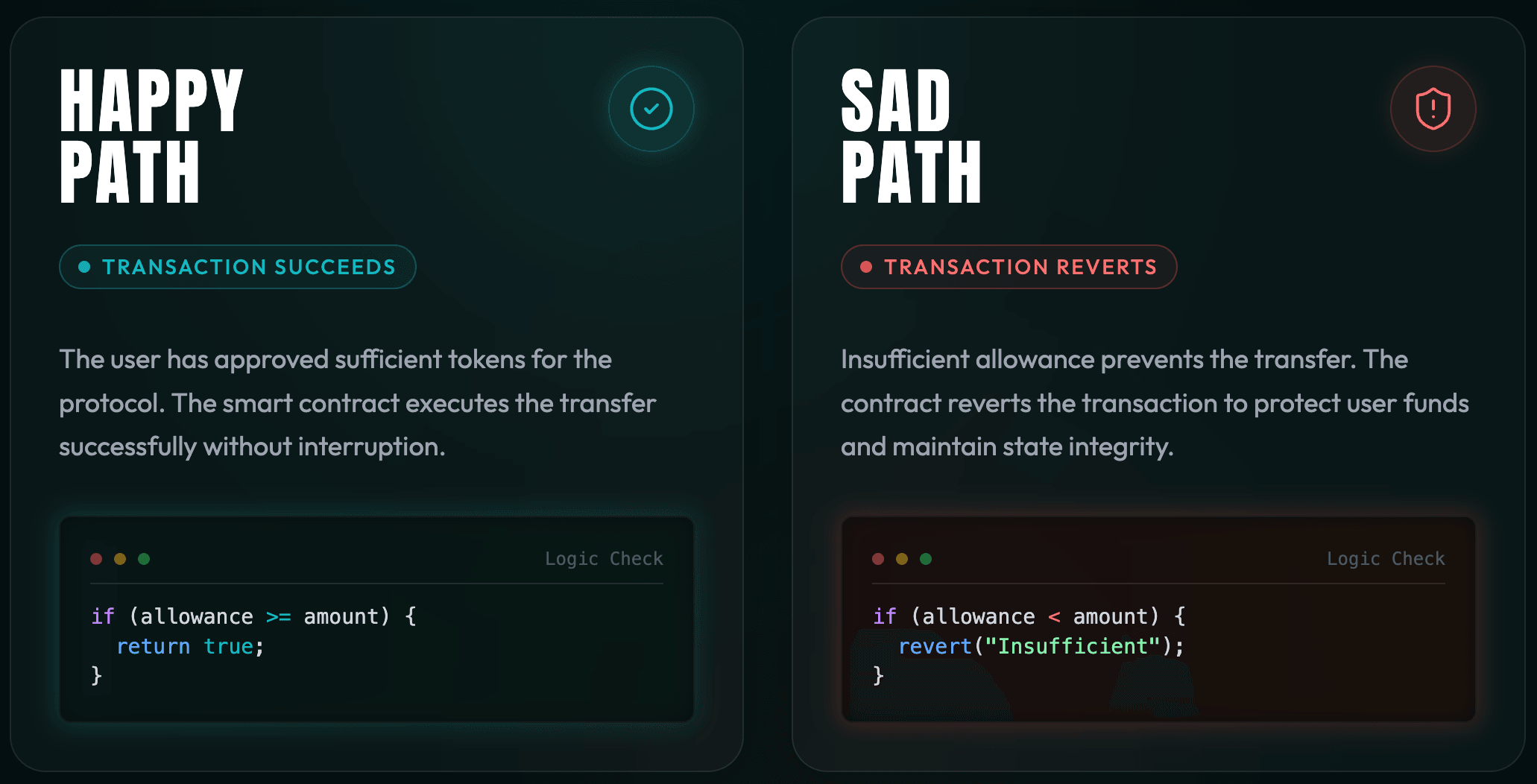

Branch Coverage (The "Sad Path"): Testing the "Happy Path" is insufficient. You must test that the contract reverts when it is supposed to. Ensure every `require` statement is triggered in a test case.
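The difference between the two kinds of test can be sketched with a toy model. The example below is plain Python standing in for a contract, not Solidity or a real test framework: `ToyVault`, `reverts`, and the test names are hypothetical, and a raised `ValueError` plays the role of a failed `require`.

```python
class ToyVault:
    """Toy stand-in for a contract; raises where Solidity would revert."""

    def __init__(self) -> None:
        self.balances = {}

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0:  # plays the role of require(amount > 0)
            raise ValueError("zero deposit")
        self.balances[user] = self.balances.get(user, 0) + amount

    def withdraw(self, user: str, amount: int) -> None:
        balance = self.balances.get(user, 0)
        if amount > balance:  # plays the role of require(amount <= balance)
            raise ValueError("insufficient balance")
        self.balances[user] = balance - amount


def reverts(call, *args) -> bool:
    """Return True if the call raises, mirroring an expected revert."""
    try:
        call(*args)
    except ValueError:
        return True
    return False


def test_happy_path() -> None:
    vault = ToyVault()
    vault.deposit("alice", 100)
    vault.withdraw("alice", 40)
    assert vault.balances["alice"] == 60


def test_sad_paths() -> None:
    vault = ToyVault()
    assert reverts(vault.deposit, "alice", 0)      # first guard is triggered
    vault.deposit("alice", 100)
    assert reverts(vault.withdraw, "alice", 101)   # second guard is triggered
    assert vault.balances["alice"] == 100          # the failed call changed nothing


if __name__ == "__main__":
    test_happy_path()
    test_sad_paths()
```

The happy-path test alone executes both functions, so line coverage already looks healthy. Only the sad-path test takes the failing side of each guard, which is what branch coverage measures.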

Run Static Analysis (Slither): Slither is a widely used, Python-based static analysis framework for Solidity. It usually runs in seconds.

The Rule: Fix every warning or document exactly why it is a false positive. Handing over code with 50+ Slither warnings forces the auditor to wade through noise.

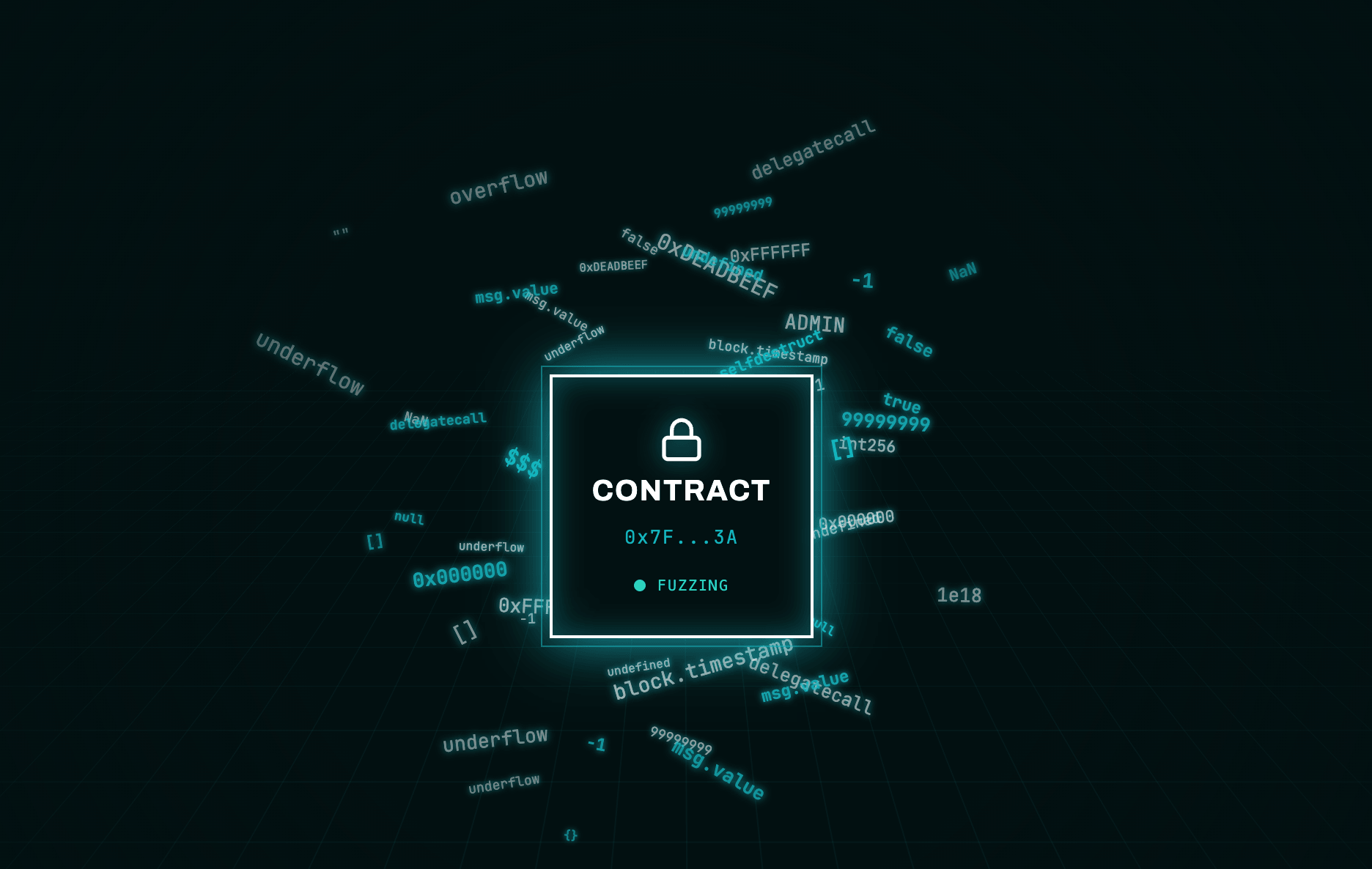

Fuzz Testing (Property-Based): Unit tests verify known logic; fuzzers find "unknown unknowns". Use tools like Foundry (Forge) or Echidna to define invariants (e.g., `total_deposits == total_withdrawals + current_balance`) and blast the contract with random inputs.
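To make the idea concrete, here is a minimal sketch of invariant fuzzing against a toy Python model, using the invariant quoted above. It does not use Foundry or Echidna: `LedgerVault`, `BuggyVault`, and `first_violation` are hypothetical names, and the planted bug exists only to show what a violation looks like.

```python
import random
from typing import Callable, Optional


class LedgerVault:
    """Toy model tracking the three quantities named in the invariant."""

    def __init__(self) -> None:
        self.total_deposits = 0
        self.total_withdrawals = 0
        self.current_balance = 0

    def deposit(self, amount: int) -> None:
        self.total_deposits += amount
        self.current_balance += amount

    def withdraw(self, amount: int) -> None:
        if amount > self.current_balance:  # stands in for a require(...) revert
            raise ValueError("insufficient balance")
        self.total_withdrawals += amount
        self.current_balance -= amount


class BuggyVault(LedgerVault):
    """Same model with a planted bug: withdrawals are never recorded."""

    def withdraw(self, amount: int) -> None:
        if amount > self.current_balance:
            raise ValueError("insufficient balance")
        self.current_balance -= amount


def invariant_holds(vault: LedgerVault) -> bool:
    return vault.total_deposits == vault.total_withdrawals + vault.current_balance


def first_violation(make_vault: Callable[[], LedgerVault],
                    runs: int = 10_000, seed: int = 0) -> Optional[int]:
    """Apply random calls; return the step where the invariant first breaks, else None."""
    rng = random.Random(seed)
    vault = make_vault()
    for step in range(runs):
        amount = rng.randrange(0, 10**18)
        try:
            if rng.random() < 0.5:
                vault.deposit(amount)
            else:
                vault.withdraw(amount)
        except ValueError:
            pass  # a reverted call leaves state untouched
        if not invariant_holds(vault):
            return step
    return None


if __name__ == "__main__":
    print("correct model:", first_violation(LedgerVault))
    print("buggy model:", first_violation(BuggyVault))
```

Nobody wrote a test case for the missing bookkeeping in `BuggyVault`. The random call sequence reaches it because the invariant is checked after every step, which is the same shape of check a real fuzzer runs against the compiled contract.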

Phase 4: Operational logistics

The Video Walkthrough: Record a 30-minute Loom video in which the lead developer explains the architecture and flow of funds. This compresses the auditor's learning curve from days to hours.

Define Scope Explicitly: Create a `scope.txt` listing specific files to check. Explicitly exclude mock contracts, deployment scripts, and unmodified third-party libraries (like OpenZeppelin).

Summary checklist

| Category | Action Item | Readiness Target |
|---|---|---|
| Docs | Technical Whitepaper | Formulas & Threat Model defined (LaTeX) |
| Docs | NatSpec | 100% of public interfaces annotated |
| Code | Linting | Zero errors (Solhint/Prettier) |
| Tests | Coverage | >95% Branch Coverage |
| Tests | Fuzzing | 24h+ run with no failures |
| Tools | Static Analysis | Slither report clean (or documented) |
| Ops | Freeze | Commit Hash identified & tagged |
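The targets in the table are concrete enough to encode as a simple gate, for example in a pre-audit CI step. The following is only a sketch under assumptions: the `Readiness` fields and the `unmet_targets` function are hypothetical, and the metric values would have to come from your own coverage, lint, fuzzing, and Slither reports.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Readiness:
    whitepaper_done: bool                # formulas and threat model written up
    natspec_pct: float                   # % of public interfaces annotated
    lint_errors: int                     # open Solhint/Prettier errors
    branch_coverage_pct: float
    fuzz_hours: float                    # length of the longest fuzzing run
    fuzz_failures: int
    unexplained_slither_findings: int    # neither fixed nor documented
    frozen_commit: Optional[str]         # tagged commit hash, if any


def unmet_targets(r: Readiness) -> List[str]:
    """Return the checklist rows whose readiness target is not yet met."""
    checks = [
        ("Docs/Technical Whitepaper", r.whitepaper_done),
        ("Docs/NatSpec", r.natspec_pct >= 100.0),
        ("Code/Linting", r.lint_errors == 0),
        ("Tests/Coverage", r.branch_coverage_pct > 95.0),
        ("Tests/Fuzzing", r.fuzz_hours >= 24.0 and r.fuzz_failures == 0),
        ("Tools/Static Analysis", r.unexplained_slither_findings == 0),
        ("Ops/Freeze", bool(r.frozen_commit)),
    ]
    return [name for name, ok in checks if not ok]


# Hypothetical snapshot of a project that is not yet audit-ready.
draft = Readiness(
    whitepaper_done=True,
    natspec_pct=100.0,
    lint_errors=0,
    branch_coverage_pct=91.5,
    fuzz_hours=6.0,
    fuzz_failures=0,
    unexplained_slither_findings=3,
    frozen_commit=None,
)

if __name__ == "__main__":
    for row in unmet_targets(draft):
        print("not ready:", row)
```

An empty list means every row of the table is satisfied; anything else is work to finish before the engagement starts.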

Your next step

Don't wait for the audit to find the "low hanging fruit."

Action: Install Slither locally today and run it against your core contracts. It takes less than 5 minutes. If it flags issues you haven't seen, you aren't ready for an audit yet. Fixing these now costs you nothing; fixing them during the audit costs you billable hours.

Partner with Zealynx

At Zealynx, we specialize in helping protocols prepare for and undergo rigorous security audits. Whether you need a pre-audit review to clean up your codebase or a full-scale security engagement, our team ensures your protocol is secure, optimized, and ready for deployment.

FAQ: Pre-Audit Preparation

1. How long before my token launch should I schedule a smart contract audit?

Schedule your audit at least 6-8 weeks before your planned launch. This accounts for 2-3 weeks of preparation (implementing this checklist), 2-3 weeks for the actual audit, and 1-2 weeks for remediation and re-review. Rushing an audit by scheduling it 1-2 weeks before launch forces auditors to work faster (increasing costs and potentially missing issues) and leaves no buffer for critical findings that require architectural changes.

2. Can I make changes to my smart contract during the audit?

No. Once the audit begins, the codebase must be frozen at a specific commit hash. Any changes—even small bug fixes—invalidate the auditor's ongoing work by shifting line numbers and changing logic they've already reviewed. This triggers expensive "diff reviews" where auditors must re-verify everything. If you discover a critical issue during the audit, document it for the remediation phase that happens after the initial audit report is delivered.

3. Is passing Slither (static analysis) enough to ensure security?

No. Slither is a baseline hygiene tool that catches known, common vulnerabilities (like reentrancy or uninitialized variables). It does not understand your protocol's specific business logic or economic invariants. Passing Slither is the minimum requirement to be ready for an audit, but it is not a substitute for a human audit or property-based fuzz testing.

4. What test coverage percentage do I need for a smart contract audit?

Aim for 95%+ branch coverage, not just line coverage. Branch coverage ensures you test both the success case ("happy path") and failure cases ("sad path") for every `if/else` and `require` statement. Many protocols mistakenly target 90% line coverage, which only checks that code runs, not that error conditions properly revert. Auditors will flag untested branches as potential vulnerabilities since unhandled edge cases are a common exploit vector in DeFi.

5. What is NatSpec and why do auditors require it?

NatSpec (the Ethereum Natural Language Specification Format) is Solidity's standard documentation format, using tags like `@notice`, `@param`, and `@return` above functions. Auditors require it because it reveals the "Intent vs. Implementation" gap: if your comment says "Deducts 1% fee" but your code calculates `amount * 10 / 10000` (0.1%), the auditor immediately spots the bug. Without NatSpec, auditors must reverse-engineer your intent from code alone, which dramatically increases discovery time and audit costs.

6. What are floating pragmas and why should I lock my Solidity version?

A floating pragma (e.g., `pragma solidity ^0.8.0;`) allows the contract to be compiled with any compiler version from 0.8.0 up to, but not including, 0.9.0. The risk is that the bytecode you audit might differ from the bytecode you deploy if a new compiler version is released between audit and deployment. Pinning the version (e.g., `pragma solidity 0.8.19;`) makes compilation deterministic: with the same compiler settings, the audited code and deployed code are byte-for-byte identical. This eliminates a class of deployment bugs and prevents "but it worked in the audit environment" issues.

Glossary

| Term | Definition |
|---|---|
| Audit Scope | Defined boundaries of what code and functionality will be reviewed. |
| Invariant | Property that must always hold true throughout contract execution. |
| NatSpec | Ethereum's standard format for inline smart contract documentation. |