

The security landscape of 2025 shifted the goalposts for Web3 founders and product leads. While 2023 and 2024 were defined by complex DeFi logic exploits, 2025 saw a pivot toward infrastructure, supply chains, and the "presentation layer."

Total losses from hacks in 2025 reached $3.4 billion. However, the most critical takeaway is not the dollar amount; it is the fact that many of these protocols had audited code. The failure points were not in the smart contracts themselves, but in how those contracts interacted with the outside world.

The following analysis breaks down the most significant incidents of the year and the systemic vulnerabilities they exposed.

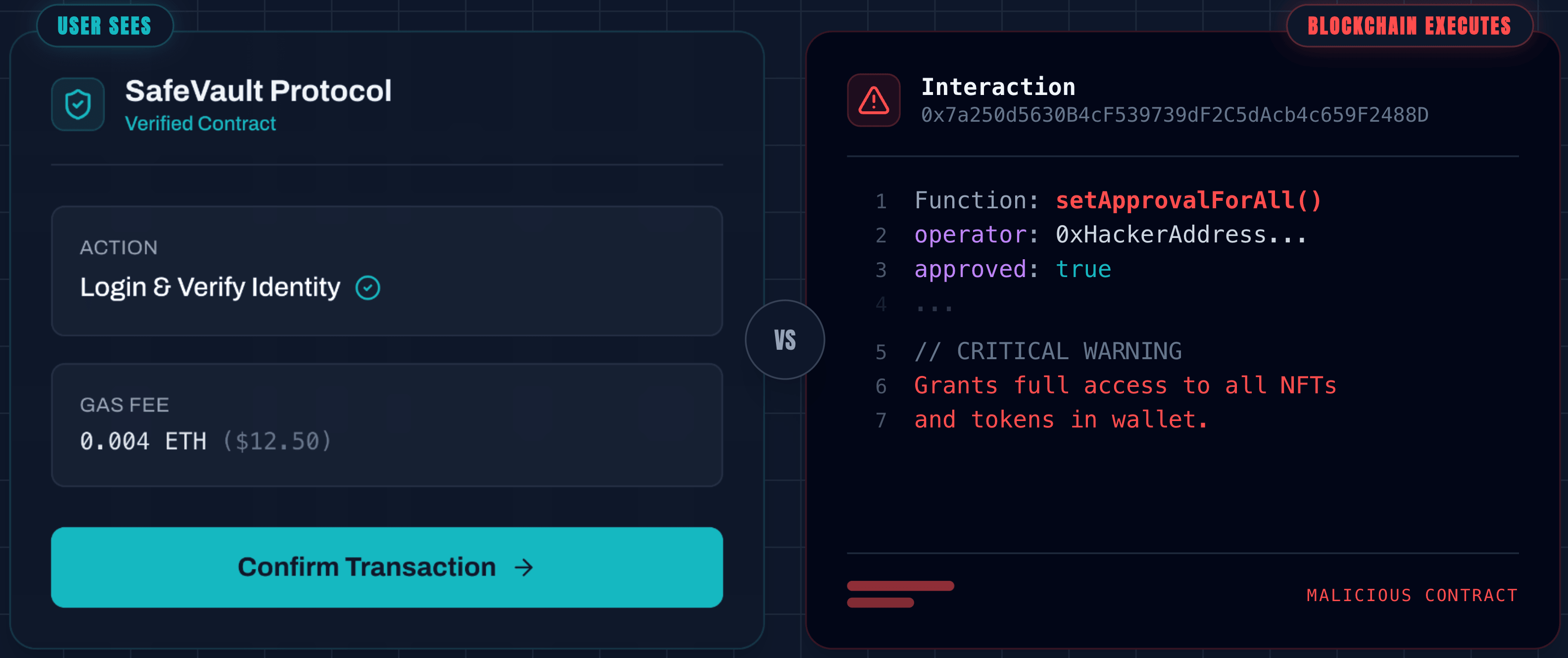

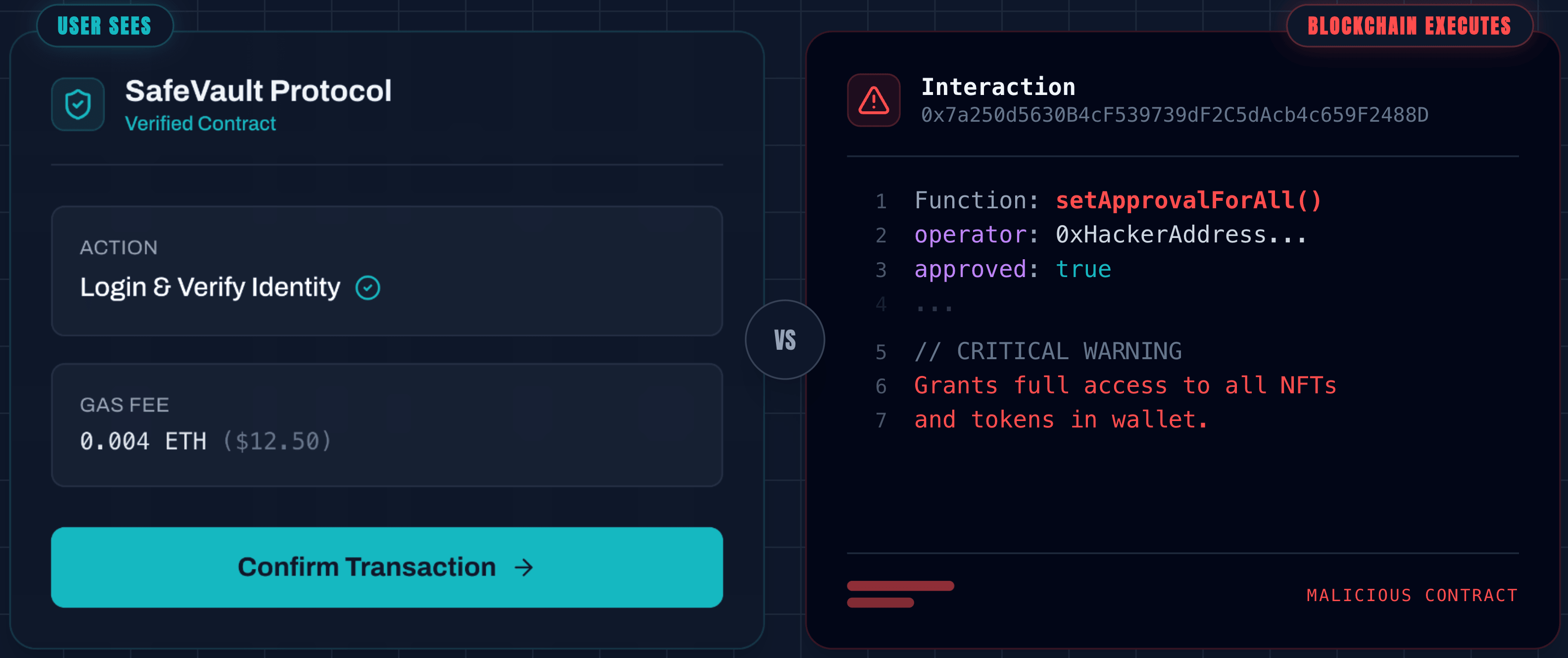

1. The presentation layer: the Bybit "blind signing" crisis

The Incident: In February 2025, Bybit lost approximately $1.5 billion. This was not a flash loan attack or a reentrancy bug. It was a sophisticated UI injection. Attackers compromised a Safe{Wallet} developer's machine via a malicious Docker container, then used that foothold to inject a script into the AWS S3 bucket hosting the Safe{Wallet} web interface that Bybit used for its multi-sig.

When Bybit's signers went to approve what looked like a routine transaction, the UI showed the correct destination and amount. In reality, the underlying hex data (the part the blockchain actually executes) had been swapped so that the transaction gave the attackers, attributed to the Lazarus Group, control of the wallet and its funds.

The Lesson: Don't trust the interface. Even if you use hardware wallets and multi-sigs, your security is only as strong as the software rendering the transaction data.

- Founder Takeaway: Implement "Independent Transaction Verification." Your signers should use a secondary, air-gapped tool or a CLI to decode the transaction hex and verify it against the UI before signing.
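The verification step can be sketched as a small script. This is a minimal Python sketch, assuming the raw Safe transaction fields (`to`, `value`, `data`, `operation`) have already been extracted by a tool that does not depend on the web UI, and assuming the intended action is a plain ERC-20 `transfer`. `Intent`, `decode_erc20_transfer`, and `check_against_intent` are hypothetical names, not part of any Safe tooling. The script decodes the calldata by hand, compares it with an intent the signer recorded separately, and flags any operation other than a plain `CALL`, because a `DelegateCall` runs another contract's code in the wallet's own context.

```python
from dataclasses import dataclass

# First 4 bytes of keccak256("transfer(address,uint256)"), the standard ERC-20 selector.
TRANSFER_SELECTOR = "a9059cbb"
# Values of Safe's Enum.Operation field.
CALL, DELEGATECALL = 0, 1


@dataclass(frozen=True)
class Intent:
    """What the signer meant to approve, recorded independently of the UI (hypothetical)."""
    token: str      # ERC-20 contract the Safe should call
    recipient: str  # address that should receive the tokens
    amount: int     # amount in the token's smallest unit


def decode_erc20_transfer(data_hex: str) -> tuple[str, int]:
    """Decode transfer(address,uint256) calldata without trusting any front-end."""
    data = data_hex.lower().removeprefix("0x")
    if len(data) != 8 + 64 + 64 or data[:8] != TRANSFER_SELECTOR:
        raise ValueError("calldata is not a plain ERC-20 transfer")
    address_word, amount_word = data[8:72], data[72:136]
    if address_word[:24] != "0" * 24:  # an address occupies only the low 20 bytes
        raise ValueError("address word has non-zero high bytes")
    return "0x" + address_word[24:], int(amount_word, 16)


def check_against_intent(intent: Intent, to: str, value: int,
                         data_hex: str, operation: int) -> list[str]:
    """Return every mismatch; an empty list means the raw fields match the intent."""
    problems = []
    if operation != CALL:
        problems.append("operation is not CALL (DelegateCall runs foreign code in the wallet)")
    if to.lower() != intent.token.lower():
        problems.append(f"target {to} is not the expected token {intent.token}")
    if value != 0:
        problems.append(f"unexpected native value {value}")
    try:
        recipient, amount = decode_erc20_transfer(data_hex)
    except ValueError as err:
        problems.append(str(err))
    else:
        if recipient != intent.recipient.lower():
            problems.append(f"recipient {recipient} differs from {intent.recipient}")
        if amount != intent.amount:
            problems.append(f"amount {amount} differs from {intent.amount}")
    return problems
```

Any non-empty result should stop the signing ceremony. A real tool would also need to cover native-value transfers, the remaining Safe fields (gas and refund parameters), and the EIP-712 hash that the hardware wallet actually signs.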

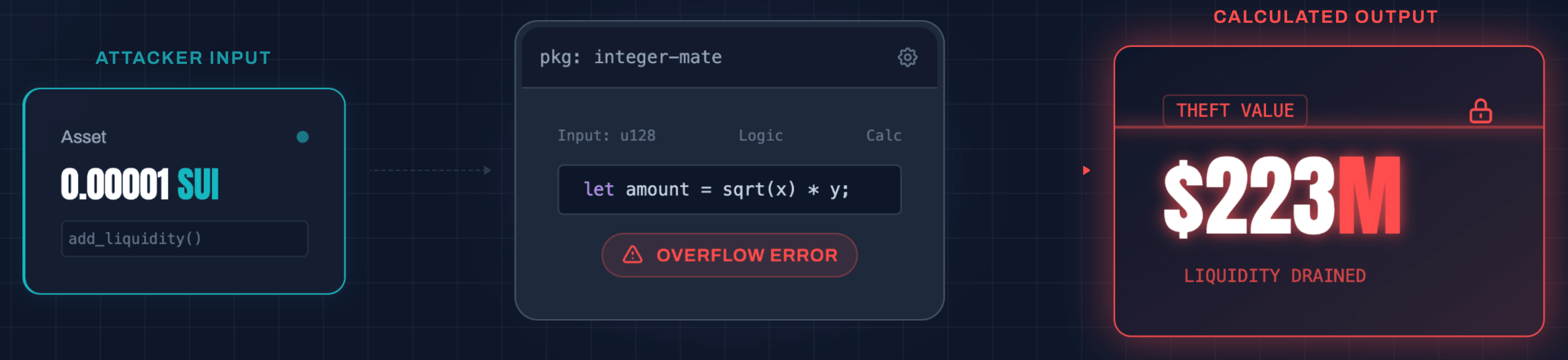

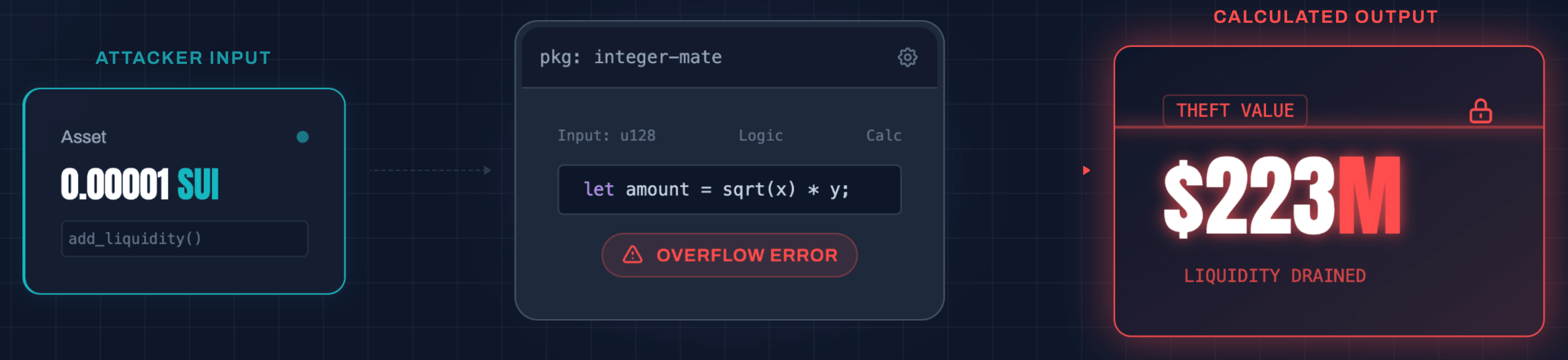

2. The dependency trap: Cetus Protocol and the integer-mate library

The Incident: Cetus Protocol, running on the Move-based Sui network, lost $223 million. Move is often marketed as "inherently secure," but this exploit showed that the language cannot protect against flawed third-party logic. A math library called integer-mate had a bug in the overflow check of its checked_shlw function. This allowed an attacker to create a liquidity position that credited them with massive value for a deposit of nearly zero.
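To make this class of bug concrete, here is a Python model of a 64-bit left shift on 256-bit unsigned integers, guarded by two different overflow checks. It assumes, following public post-incident write-ups, that the shift itself silently drops bits above 256 and that the flawed guard compared the input against a mask with the top 64 bits set instead of against 2^192. The function names echo `checked_shlw` for readability; this is an illustrative reconstruction, not the integer-mate source.

```python
# Fixed-width model: values are unsigned 256-bit integers.
U256_MAX = (1 << 256) - 1


def shlw(n: int) -> int:
    """Shift left by 64 bits, silently discarding bits above 256 (assumed fixed-width behavior)."""
    return (n << 64) & U256_MAX


def checked_shlw_flawed(n: int) -> tuple[int, bool]:
    """Illustrative flawed guard: compares against a mask instead of the real bound."""
    mask = 0xFFFFFFFFFFFFFFFF << 192  # top 64 bits set; far larger than 2**192
    if n > mask:
        return 0, True  # overflow reported
    return shlw(n), False


def checked_shlw_correct(n: int) -> tuple[int, bool]:
    """n << 64 fits in 256 bits exactly when n < 2**192."""
    if n >= 1 << 192:
        return 0, True
    return shlw(n), False


n = 1 << 192  # smallest input whose shifted value no longer fits
print(checked_shlw_flawed(n))   # (0, False): overflow silently missed
print(checked_shlw_correct(n))  # (0, True): overflow caught
```

Every input from 2^192 up to the mask slips past the flawed guard and wraps to a much smaller number. That kind of wraparound is what lets a huge liquidity figure map to a near-zero required deposit.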

The Lesson: The "Safe Language" fallacy. A secure execution environment like Move or Rust doesn't prevent logic errors in imported libraries.

- Product Lead Takeaway: Audit your dependencies as rigorously as your core code. If your protocol relies on a third-party math or governance library, that library is now part of your attack surface.

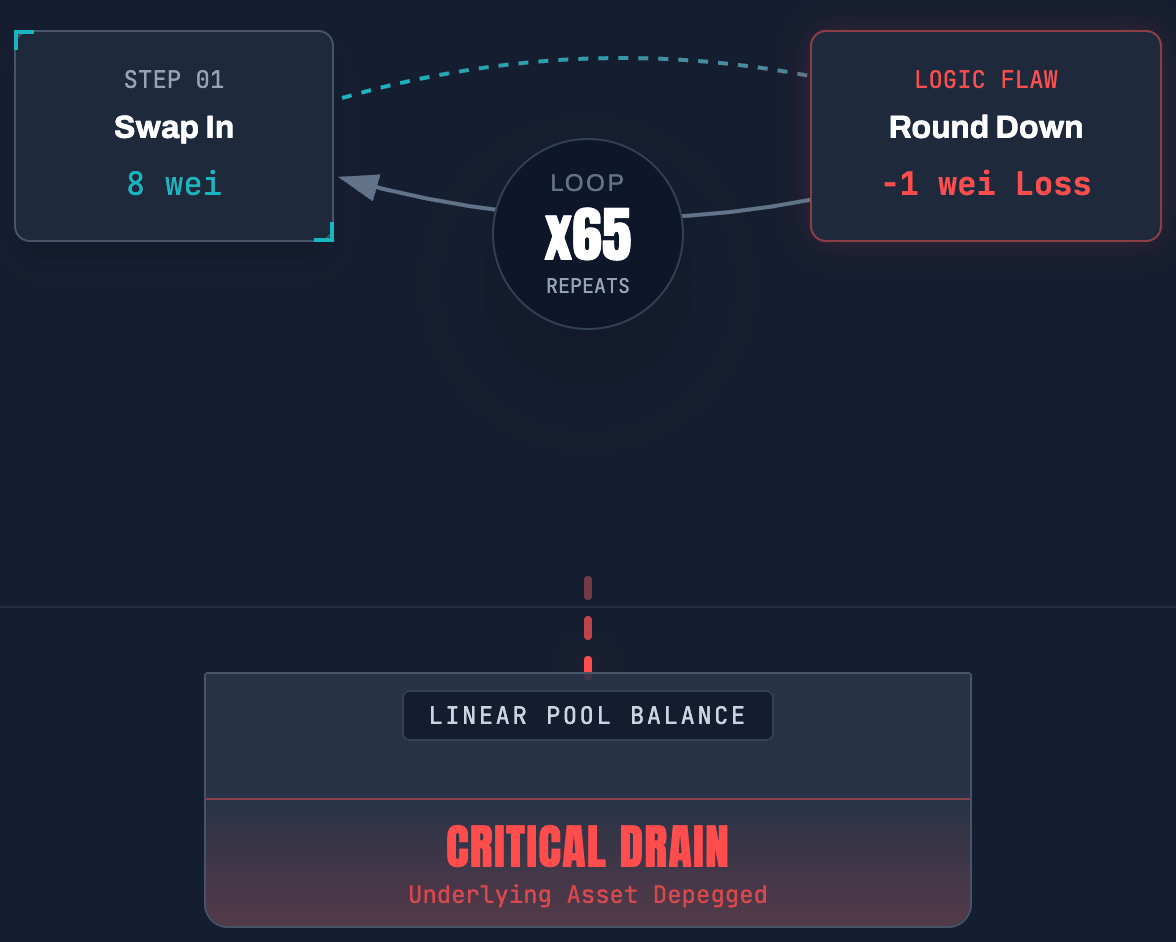

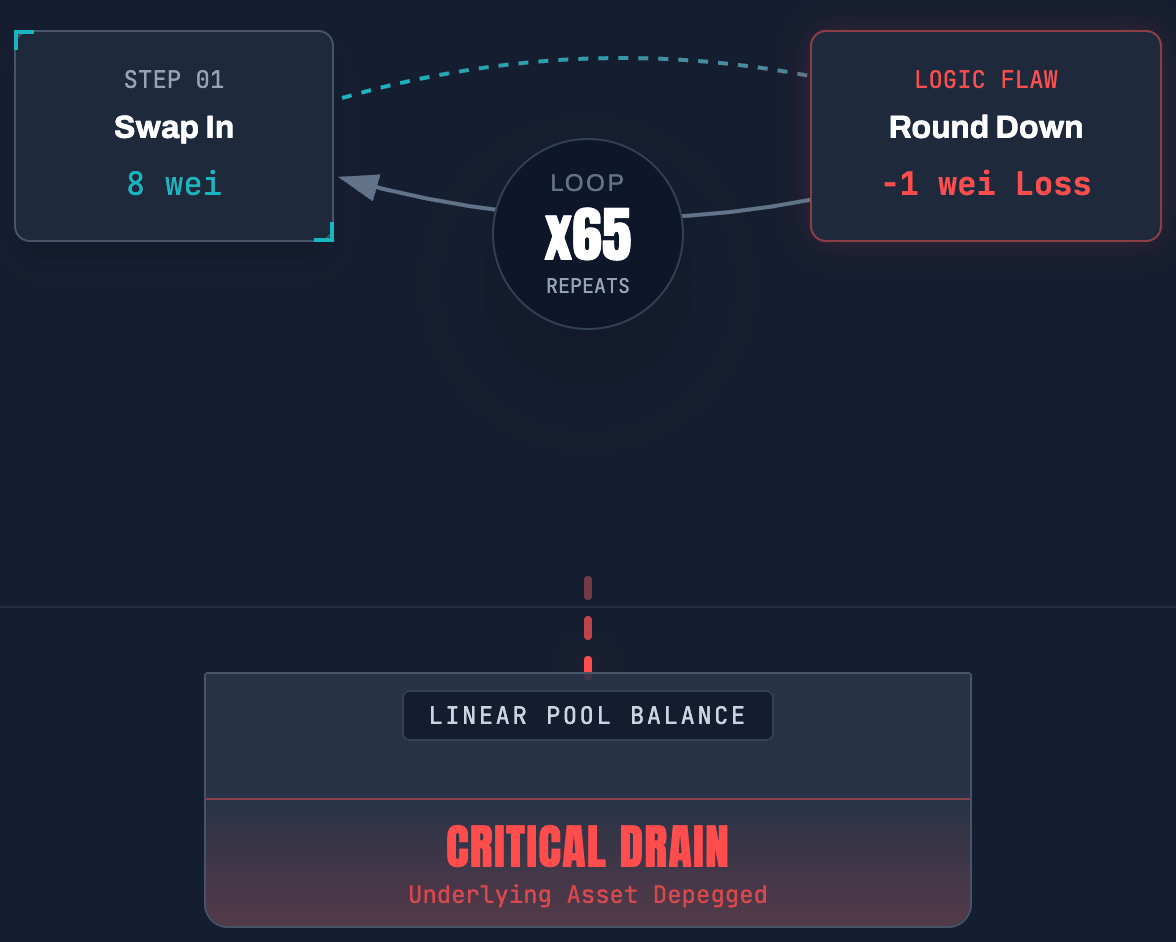

3. Precision fatigue: Balancer V2’s rounding errors

The Incident: In November 2025, Balancer V2 suffered a $128 million loss across multiple chains. The vulnerability resided in the mulDown function of its Stable Pools. By manipulating pool balances down to microscopic levels (8-9 wei), the attacker caused Solidity's integer division to lose significant precision. By repeating this "micro-swap" 65 times in a single atomic transaction, the attacker skewed the pool's invariant enough to drain the assets.

The Lesson: Economic edge cases are security flaws. Most unit tests don't check for behavior at 8 wei, yet that is exactly where the math breaks down.

- Project Lead Takeaway: Incorporate "Fuzz Testing" specifically for low-liquidity and high-slippage scenarios. Your protocol must remain mathematically sound even at the extreme edges of its decimal precision.
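A fuzz harness for this kind of edge can be very small. The Python sketch below assumes an 18-decimal fixed-point `mul_down` of the kind common in Solidity math libraries (it is not Balancer's code) and a hypothetical scaling factor between 1.0 and 2.0. It samples balances across many orders of magnitude and reports every input whose relative rounding error exceeds a tolerance.

```python
import random
from fractions import Fraction

ONE = 10**18  # 18-decimal fixed-point scale, common in Solidity math libraries


def mul_down(a: int, b: int) -> int:
    """Fixed-point multiply that rounds toward zero, like Solidity's integer division."""
    return a * b // ONE


def relative_rounding_error(balance: int, scaling_factor: int) -> Fraction:
    """Exact relative error that mul_down introduces for this input."""
    exact = Fraction(balance * scaling_factor, ONE)
    return (exact - mul_down(balance, scaling_factor)) / exact


def fuzz_mul_down(trials: int, max_rel_error: Fraction, seed: int = 0):
    """Random search for inputs whose rounding error exceeds the tolerance."""
    rng = random.Random(seed)
    failures = []
    for _ in range(trials):
        # Pick an order of magnitude first so tiny balances are not drowned out by large ones.
        balance = rng.randint(1, 10 ** rng.randint(1, 24))
        # Hypothetical token rate / scaling factor between 1.0 and 2.0.
        scaling_factor = rng.randint(ONE, 2 * ONE)
        err = relative_rounding_error(balance, scaling_factor)
        if err > max_rel_error:
            failures.append((balance, scaling_factor, err))
    return failures


failures = fuzz_mul_down(10_000, Fraction(1, 10**6))
largest = max((b for b, _, _ in failures), default=0)
print(f"{len(failures)} failing inputs; largest failing balance: {largest}")
```

Because `mul_down` loses less than one unit, the relative error is below 1/balance, so every failure the harness finds sits at tiny balances, which is exactly the region ordinary unit tests skip. In practice the same property would be written as a fuzz or invariant test against the contract itself, for example with Foundry or Echidna.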

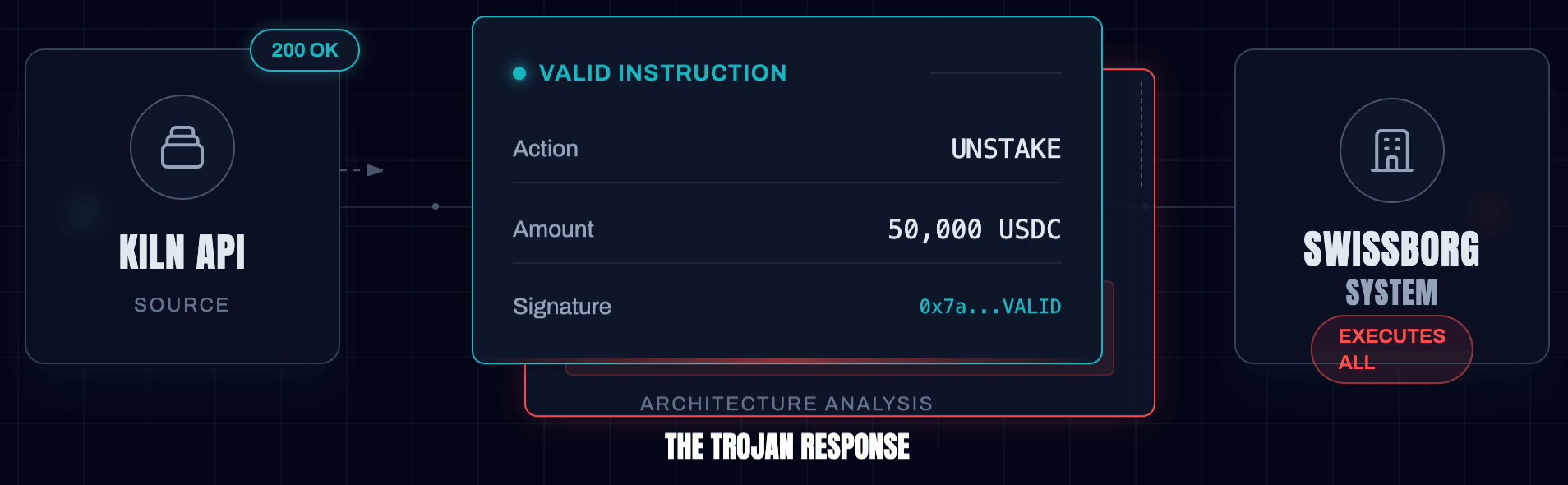

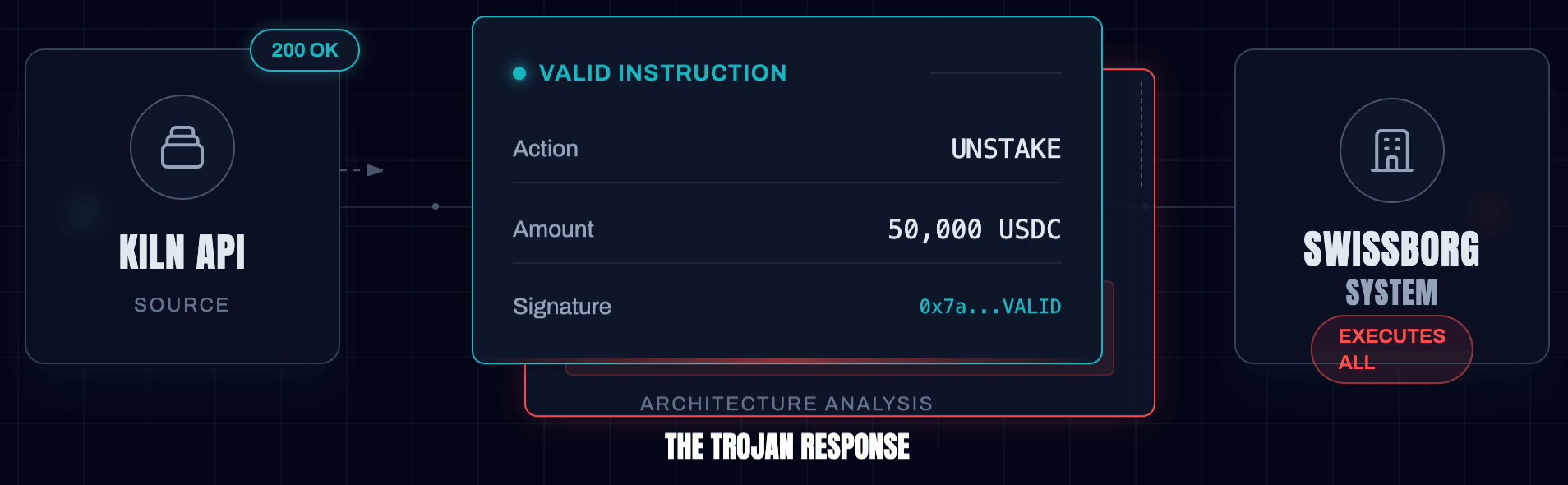

4. Supply chain fragility: SwissBorg and the Kiln API

The Incident: SwissBorg lost $42 million in SOL not because of their own code, but because of a compromised GitHub token at their staking provider, Kiln. The attacker injected a payload into the Kiln API that generated malicious transaction instructions. When SwissBorg’s systems requested a routine "unstake" command, the API returned a transaction that also included a "delegate authority" instruction, handing control of the accounts to the attacker.

The Lesson: API responses are untrusted inputs. If your protocol or platform automates transactions based on a third-party API, you have a single point of failure.

- Founder Takeaway: Treat every transaction generated by an external service as "tainted." Implement a middleware layer that parses transaction instructions and flags any unexpected commands (like unauthorized Approve or Delegate instructions) before they reach the signer.
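A minimal sketch of such a middleware check, in Python. It assumes the transaction has already been decoded into a simplified list of instructions. The `Instruction` class, the `ALLOWED` policy, and both functions are hypothetical, and the instruction names only loosely echo Solana's stake program rather than reproducing a real SDK's types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instruction:
    """Simplified, already-decoded instruction (hypothetical model, not a real SDK type)."""
    program: str  # which on-chain program it targets, e.g. "stake"
    name: str     # decoded instruction name, e.g. "Deactivate"


# Hypothetical allowlist: the only instructions each requested operation may contain.
ALLOWED = {
    "unstake": {("stake", "Deactivate")},
    "withdraw": {("stake", "Withdraw")},
}


def unexpected_instructions(operation: str, instructions: list[Instruction]) -> list[Instruction]:
    """Return every instruction that the operation's allowlist does not cover."""
    allowed = ALLOWED.get(operation, set())  # unknown operation -> nothing allowed (fail closed)
    return [ix for ix in instructions if (ix.program, ix.name) not in allowed]


def gate_before_signing(operation: str, instructions: list[Instruction]) -> list[Instruction]:
    """Raise instead of forwarding a transaction that contains anything unexpected."""
    extra = unexpected_instructions(operation, instructions)
    if extra:
        raise PermissionError(f"blocked {operation}: unexpected {extra}")
    return instructions


api_response = [Instruction("stake", "Deactivate"), Instruction("stake", "Authorize")]
print(unexpected_instructions("unstake", api_response))
# [Instruction(program='stake', name='Authorize')]
```

The policy is an allowlist rather than a blocklist: the attacker chooses what to inject, so anything the requested operation was not expected to contain is rejected, and an operation with no policy fails closed.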

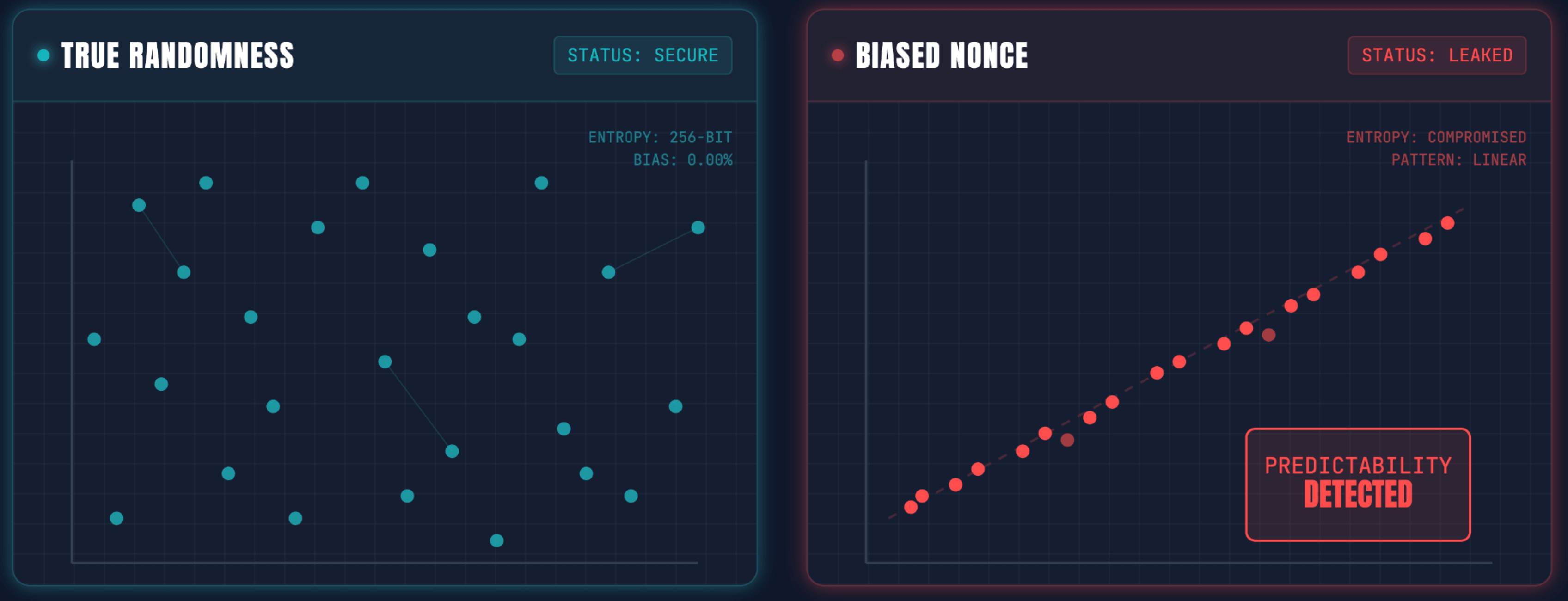

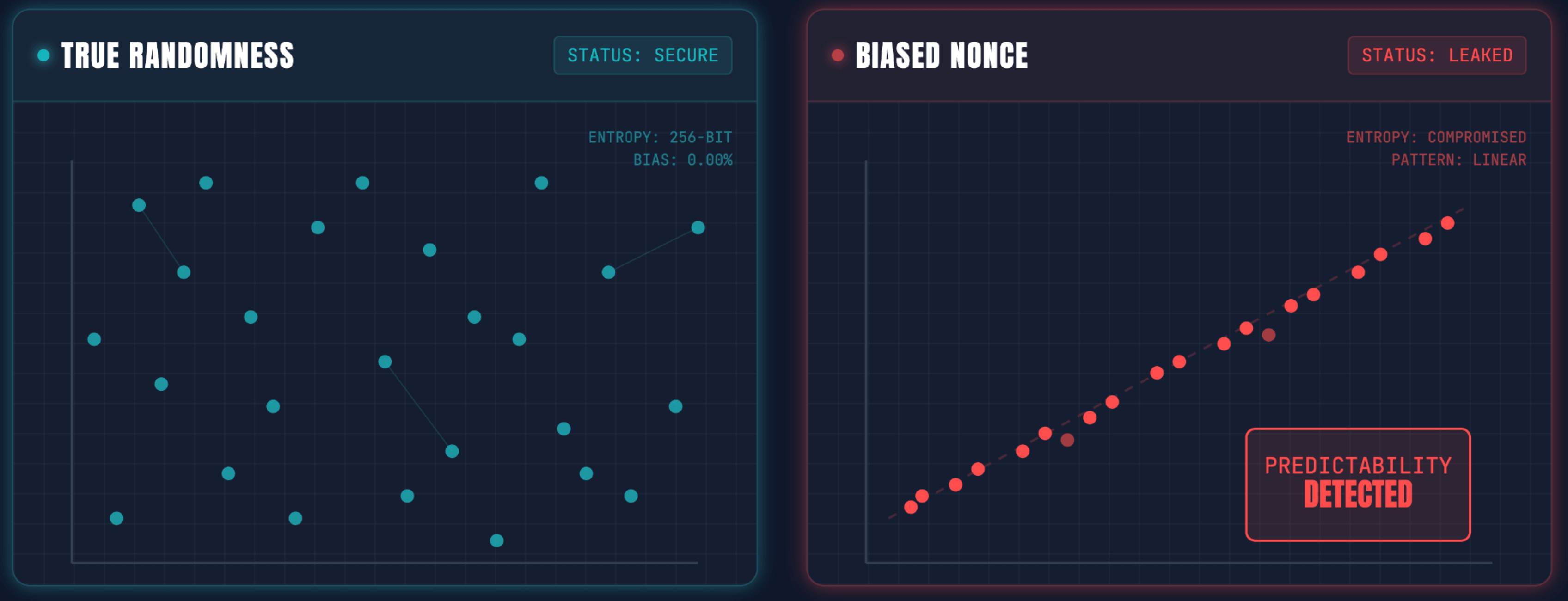

5. Cryptographic implementation: the Upbit nonce failure

The Incident: Upbit lost roughly $40 million due to a failure in their proprietary digital signature infrastructure. The system produced biased "nonces" (random numbers used in ECDSA signatures). By analyzing a history of valid signatures on the blockchain, attackers were able to mathematically derive the private keys offline.
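Why nonce quality is a key-compromise issue can be shown with the most extreme form of bias: reusing the same nonce for two signatures. The Python sketch below implements textbook ECDSA over secp256k1 and then recovers the private key from two signatures that share a nonce, using s1 - s2 = k^-1 (z1 - z2) mod n. It is illustrative only. The reported Upbit flaw involved biased rather than necessarily reused nonces, which attackers typically exploit with lattice techniques across many signatures, and hand-rolled code like this is exactly what the lesson below warns against using in production. The key, nonce, and messages in the demo are hypothetical.

```python
import hashlib

# secp256k1 parameters: field prime P, group order N, generator G.
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFF_FFFFFFFF_FFFFFFFF_FFFFFFFE_BAAEDCE6_AF48A03B_BFD25E8C_D0364141
G = (0x79BE667E_F9DCBBAC_55A06295_CE870B07_029BFCDB_2DCE28D9_59F2815B_16F81798,
     0x483ADA77_26A3C465_5DA4FBFC_0E1108A8_FD17B448_A6855419_9C47D08F_FB10D4B8)


def point_add(a, b):
    """Add two affine points on y^2 = x^3 + 7 over F_P (None is the point at infinity)."""
    if a is None:
        return b
    if b is None:
        return a
    (x1, y1), (x2, y2) = a, b
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if a == b:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return x3, (lam * (x1 - x3) - y1) % P


def scalar_mult(k, point):
    """Double-and-add scalar multiplication; fine for a demo, NOT constant-time."""
    result = None
    while k:
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def digest(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big")


def sign(d, z, k):
    """Textbook ECDSA with a caller-supplied nonce k (the dangerous part)."""
    r = scalar_mult(k, G)[0] % N
    s = pow(k, -1, N) * (z + r * d) % N
    return r, s


def recover_private_key(z1, sig1, z2, sig2):
    """Given two signatures that share r (same k), solve for k and then for d."""
    (r, s1), (r2, s2) = sig1, sig2
    if r != r2:
        raise ValueError("signatures do not share a nonce")
    k = (z1 - z2) * pow(s1 - s2, -1, N) % N
    return (s1 * k - z1) * pow(r, -1, N) % N


# Demo: two withdrawals signed with the same nonce.
d = 0x1F2E3D4C5B6A7988_0112233445566778_899AABBCCDDEEFF0_0112233445566778  # hypothetical key
k = 0xC0FFEE  # the reused nonce
z1, z2 = digest(b"withdraw 10"), digest(b"withdraw 20")
sig1, sig2 = sign(d, z1, k), sign(d, z2, k)
assert recover_private_key(z1, sig1, z2, sig2) == d  # key recovered from public data only
```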

The Lesson: Never "roll your own" crypto. Standard, peer-reviewed libraries (such as libsecp256k1 for signing, or OpenZeppelin for on-chain signature verification) exist for a reason. Custom implementations often introduce subtle cryptographic weaknesses that aren't visible until it's too late.

- Technical Lead Takeaway: Standardize on battle-tested, open-source cryptographic libraries. If a custom solution is required for performance, it requires a specialized cryptographic audit, not just a standard smart contract review.

Conclusion: the move toward "defense in depth"

The hacks of 2025 demonstrate that the Smart Contract Audit is no longer a sufficient security box to check. We are entering an era of Systemic Security, where the infrastructure, the front-end, and the human signers are the primary targets.

Your Next Step:

Perform an "Internal Pipeline Audit." Instead of looking at your Solidity code, map out every tool and person that touches a transaction before it hits the mainnet—from the AWS S3 buckets hosting your UI to the GitHub tokens used by your dev team. If any one of those points can trigger a transaction, that is where your next exploit lives.
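One way to start that mapping is to model the pipeline as a directed graph in which an edge A -> B means "A can change what B does or produces," then list everything upstream of a mainnet transaction. The inventory below is hypothetical and deliberately small; the useful part is the reverse breadth-first search, which surfaces indirect paths such as a developer laptop reaching the signing UI through cloud credentials.

```python
from collections import deque

# Hypothetical inventory. An edge A -> B means "A can change what B does or produces".
INFLUENCES = {
    "dev_laptop": ["github_token", "aws_credentials"],
    "github_token": ["ci_pipeline"],
    "ci_pipeline": ["frontend_bundle"],
    "aws_credentials": ["s3_bucket"],
    "s3_bucket": ["frontend_bundle"],
    "frontend_bundle": ["signer_ui"],
    "staking_api": ["signer_ui"],
    "signer_ui": ["mainnet_tx"],
    "docs_site": [],
}


def upstream_of(target: str, graph: dict[str, list[str]]) -> set[str]:
    """Every node with a directed path to target, found by reverse breadth-first search."""
    reverse: dict[str, list[str]] = {}
    for src, dsts in graph.items():
        for dst in dsts:
            reverse.setdefault(dst, []).append(src)
    seen: set[str] = set()
    queue = deque([target])
    while queue:
        node = queue.popleft()
        for parent in reverse.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(upstream_of("mainnet_tx", INFLUENCES)))
# ['aws_credentials', 'ci_pipeline', 'dev_laptop', 'frontend_bundle',
#  'github_token', 's3_bucket', 'signer_ui', 'staking_api']
```

Every node in the result is a place where a compromise could alter what gets signed; anything outside it (here, `docs_site`) can be deprioritized for this particular review.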

Get the DeFi Protocol Security Checklist

15 vulnerabilities every DeFi team should check before mainnet. Used by 35+ protocols.

No spam. Unsubscribe anytime.

Get in touch

At Zealynx, we understand that security goes beyond smart contracts. From infrastructure reviews to full-stack audits, we ensure every layer of your protocol is secure. Reach out to start the conversation.

FAQ: Web3 security lessons from 2025

1. What is "Blind Signing" and how can users prevent it?

"Blind Signing" occurs when a user approves a transaction based on what the UI displays, without verifying the actual underlying hex data. In the Bybit incident, the UI showed legitimate data, but the transaction sent to the blockchain was malicious. Users can prevent this by using independent hardware wallet verification or CLI tools to decode the raw hex data before signing.

2. Why are audits not enough to prevent exploits like Bybit's?

Standard smart contract audits focus on the code running on the blockchain (Solidity/Rust). They do not typically cover the "Presentation Layer" (front-end code, hosting infrastructure, DNS) or internal development pipelines. The Bybit attack came through a compromised developer machine and the cloud hosting behind its Safe{Wallet} interface, an area outside the scope of a traditional protocol audit.

3. What makes "safe" languages like Move vulnerable?

Languages like Move and Rust offer memory safety (and, in Move's case, formal verification tooling) that prevents many common bugs found in Solidity. However, they cannot prevent logic errors in third-party libraries. If a protocol imports an external library (like integer-mate in the Cetus hack) that contains a flaw, the protocol inherits that vulnerability regardless of the language's safety features.

4. How do rounding errors lead to massive losses in DeFi?

In DeFi, a single rounding error (precision loss) usually costs only dust, a minuscule amount of value. However, if an attacker can force a calculation to round in their favor repeatedly, within a loop or across massive volumes, they can drain the pool's liquidity. The Balancer V2 exploit used this "Precision Fatigue" to manipulate the pool's invariant.

5. What is a "Supply Chain" attack in Web3?

A supply chain attack targets the tools, libraries, or services a project relies on, rather than the project itself. The SwissBorg/Kiln incident is a prime example: attackers compromised a GitHub token at Kiln (a staking provider), which allowed them to inject malicious logic into the API that SwissBorg used, effectively compromising SwissBorg through a trusted vendor.

6. How can protocols secure their "Generation Layer" (nonces)?

The "Generation Layer" involves the cryptographic randomness used to generate keys and signatures. If the random number generator (RNG) is biased or predictable (as with Upbit's nonces), attackers can reverse-engineer private keys. Protocols must avoid "rolling their own crypto" and strictly use battle-tested, standard cryptographic libraries (like libsecp256k1 or other standard implementations for signing, and OpenZeppelin for on-chain verification) that have undergone specific cryptographic audits.

Glossary

| Term | Definition |
|---|---|
| Blind Signing | Approving blockchain transactions based on UI display without verifying the underlying transaction data. |
| Rounding Error | Precision loss in mathematical calculations that can be exploited to drain protocol funds. |
| Hardware Wallet | Physical device storing cryptocurrency private keys offline for enhanced security. |
| Nonce | Random number used once in cryptographic operations, critical for signature security. |
| UI Injection | Attack inserting malicious code into a user interface to manipulate transaction data. |
